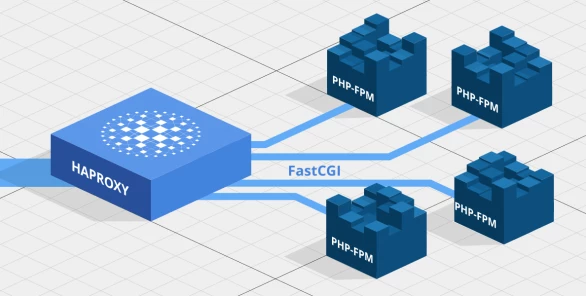

HAProxy now supports the FastCGI protocol, enabling fast, secure, and observable load balancing to PHP, Python, and other dynamic scripting languages. In this post, you will learn how to load balance PHP-FPM applications using HAProxy and FastCGI.

HAProxy version 2.1 introduced support for proxying the FastCGI protocol. This means that, for the first time, HAProxy can route requests directly to applications written in dynamic scripting languages like PHP and Python, with no intermediary web server to translate the protocol. You can integrate dynamic backend applications with regular static pages by using HAProxy’s routing rules. On top of this, HAProxy is designed for performance, observability, and security, which means a better user experience, detailed metrics, and safeguards around your FastCGI app.

FastCGI is a protocol that was developed during the mid-1990s to be a standard scheme for exchanging data between a web server and a backend application. That backend application didn’t have to be a scripted program, although it often was. FastCGI can be used to connect to any compatible program that speaks the protocol, including applications written in C. Today, it’s often used to connect servers like Apache to external PHP programs. Separating PHP into its own process apart from the web server or proxy allows for greater scalability.

FastCGI & Its Precursor, CGI

FastCGI is an application-layer protocol that was built to solve problems people were experiencing when using the older Common Gateway Interface (CGI) protocol. CGI was created during the early 1990s as a standard way for a web server to communicate with scripts, typically Perl scripts. At the time, web servers used various, non-standard ways of communicating with external scripts, making it difficult to write a program that was compatible with different server software. CGI made communication uniform and consistent.

With CGI, whenever a request was received by the web server, a new CGI process was spawned to run the script. The web server passed parameters to the external process via environment variables and got responses via stdout. Although CGI didn’t become an official specification until much later, RFC 3875 gives us a clue about the CGI rationale:

> The server acts as an application gateway. It receives the request from the client, selects a CGI script to handle the request, converts the client request to a CGI request, executes the script and converts the CGI response into a response for the client.
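
The exchange described above can be sketched in a few lines of Python. This is only an illustration of the CGI convention, not code from any particular server: the function name `build_cgi_response` is hypothetical, while `REQUEST_METHOD` and `QUERY_STRING` are two of the variables that RFC 3875 defines.

```python
import os
import sys


def build_cgi_response(environ):
    """Build a CGI response from the variables the web server passed in."""
    method = environ.get("REQUEST_METHOD", "GET")
    query = environ.get("QUERY_STRING", "")
    # A CGI response is a header block, a blank line, then the body.
    headers = "Content-Type: text/plain\r\n\r\n"
    body = f"method={method} query={query}\n"
    return headers + body


if __name__ == "__main__":
    # The web server sets the environment before spawning this process
    # and reads whatever the process writes to stdout.
    sys.stdout.write(build_cgi_response(os.environ))
```

Every request pays the cost of starting the interpreter and loading the script, which is the overhead that FastCGI was designed to remove.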

The problem with CGI was that it fired up a new process every time a client requested a script. This consumed a large amount of memory when there were many concurrent requests. Due to this limitation, its reign as king of dynamic content was soon usurped by FastCGI. Lincoln Stein’s 1999 article, “Is CGI Dead?”, tells how other technologies competed to steal CGI’s crown:

> …a host of CGI alternatives and spin-offs has appeared in recent years. There are persistent CGI variants such as FastCGI, embedded CGI emulators such as mod_perl and Velocigen, server APIs such as ISAPI, and template-driven solutions such as ASP and PHP.

FastCGI had something good going for it. Like CGI, it could invoke a Perl, Python or PHP script and return the output to the server. However, it did not need to create a new process every time to do so. Instead, it spawned the process but kept it running, which allowed it to reuse that same process again and again.

A lot of time has passed since then. What makes FastCGI a viable choice in today’s technology landscape? Primarily, it comes down to the fact that the problem of communicating with a PHP, Python, or Perl script has not disappeared. Raw HTTP requests cannot be directly translated into the task of executing a script on the server.

You could, of course, write a Python script that receives HTTP messages directly, but then you would have embedded HTTP server logic into your script and muddled low-level protocol details with your business logic. Such an approach would likely not cover all of the aspects of a true web server, either. It would also mean duplicating this effort across each of your scripts.

Another solution is to install language parsing modules, such as mod_php, into the web server itself. This gives the web server the ability to execute scripts on its own. However, that limits your ability to scale the number of processes running the script independently from the web server. In many cases, it also means that the PHP module is loaded even for HTTP requests that don’t require PHP processing.

The better alternative is to run the script in its own process and leave the task of receiving HTTP requests to the web server. FastCGI allows you to separate the web server (or proxy) and the running script by defining the communication protocol between the two. Performance benchmarks indicate that running scripts in their own process also improves performance.
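
To make that protocol a little more concrete, here is a minimal sketch of how a FastCGI client frames the start of a request. The record layout and numeric constants follow the FastCGI 1.0 specification; the helper names (`encode_record`, `encode_length`, `encode_params`) are made up for this example, and the sketch ignores padding and content longer than 65,535 bytes.

```python
import struct

FCGI_VERSION_1 = 1
FCGI_BEGIN_REQUEST = 1  # record type that opens a request
FCGI_PARAMS = 4         # record type that carries CGI-style variables
FCGI_RESPONDER = 1      # role: behave like a classic CGI program
FCGI_KEEP_CONN = 1      # flag: keep the connection open after the response


def encode_record(record_type, request_id, content=b""):
    """Wrap content in the 8-byte FastCGI record header (no padding)."""
    header = struct.pack("!BBHHBx", FCGI_VERSION_1, record_type,
                         request_id, len(content), 0)
    return header + content


def encode_length(n):
    """Lengths under 128 take one byte; longer ones take four, high bit set."""
    if n < 128:
        return bytes([n])
    return struct.pack("!I", n | 0x80000000)


def encode_params(params):
    """Encode name-value pairs the way FCGI_PARAMS records carry them."""
    out = b""
    for name, value in params.items():
        n, v = name.encode(), value.encode()
        out += encode_length(len(n)) + encode_length(len(v)) + n + v
    return out


# Begin-request body: 2-byte role, 1-byte flags, 5 reserved bytes.
begin = encode_record(FCGI_BEGIN_REQUEST, 1,
                      struct.pack("!HB5x", FCGI_RESPONDER, FCGI_KEEP_CONN))
params = encode_record(FCGI_PARAMS, 1, encode_params({
    "SCRIPT_FILENAME": "/var/www/myapp/index.php",
    "REQUEST_METHOD": "GET",
}))
```

The variables that CGI passed through the process environment travel as name-value pairs over a socket instead, which is what lets the worker process stay alive between requests.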

Install PHP-FPM

FastCGI Process Manager (PHP-FPM) is a popular FastCGI implementation for PHP. It runs your PHP scripts and accepts FastCGI requests on their behalf. Traditionally, you would configure Apache to communicate with PHP-FPM via the mod_fastcgi or mod_proxy_fcgi module. However, with HAProxy version 2.1, you can relay requests directly from HAProxy to a running PHP-FPM service.

You can connect HAProxy to a PHP-FPM process either by Unix socket or by TCP/IP. Communicating via a Unix socket avoids any network overhead, but it requires that the PHP-FPM process run on the same server as HAProxy. TCP/IP, on the other hand, lets you run the PHP-FPM process on a different server, but it introduces the potential for network overhead.
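
As a rough illustration of the two transports, the following Python sketch opens a connection to either kind of address. The function names are hypothetical, and the parsing is deliberately simplified: it only distinguishes an absolute socket path from a `host:port` pair and does not handle the other address forms that PHP-FPM accepts.

```python
import socket


def parse_listen(value):
    """Classify an address as ("unix", path) or ("tcp", (host, port))."""
    value = value.strip()
    if value.startswith("/"):
        # An absolute path means a Unix domain socket on the same machine.
        return "unix", value
    host, _, port = value.rpartition(":")
    return "tcp", (host, int(port))


def connect(value, timeout=5.0):
    """Open a stream connection to a Unix socket or a TCP endpoint."""
    kind, address = parse_listen(value)
    family = socket.AF_UNIX if kind == "unix" else socket.AF_INET
    sock = socket.socket(family, socket.SOCK_STREAM)
    sock.settimeout(timeout)
    sock.connect(address)
    return sock
```

Only the address family differs between the two cases. The FastCGI bytes sent over the connection are the same either way.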

First, let’s see how to install PHP-FPM. On an Ubuntu 18.04 server, run the following commands, which install PHP-FPM and a compatible version of PHP. At the time of this writing, it installs version 7.2 of both PHP and the PHP-FPM service.

    $ sudo apt update
    $ sudo apt install php-fpm

Afterward, you can check the version of PHP by passing the --version flag to the php program:

    $ php --version
    PHP 7.2.24-0ubuntu0.18.04.1 (cli) (built: Oct 28 2019 12:07:07) (NTS)
    Copyright (c) 1997-2018 The PHP Group
    Zend Engine v3.2.0, Copyright (c) 1998-2018 Zend Technologies
        with Zend OPcache v7.2.24-0ubuntu0.18.04.1, Copyright (c) 1999-2018, by Zend Technologies

You can also check that the PHP-FPM service is installed and running:

    $ sudo systemctl status php7.2-fpm.service
    php7.2-fpm.service - The PHP 7.2 FastCGI Process Manager
       Loaded: loaded (/lib/systemd/system/php7.2-fpm.service; enabled; vendor preset: enabled)
       Active: active (running) since Tue 2020-01-07 15:38:28 UTC; 49min ago

As you can see, we did not install a web server such as Apache or NGINX. That’s because we are going to convert HTTP requests to FastCGI directly in HAProxy. This simplifies your PHP application’s server dependencies and reduces latency by removing a hop in the request path.

PHP-FPM runs independently of a web server. Realistically, however, any PHP application will need images, CSS, JavaScript files, and other static resources in addition to PHP files, so you will likely still need a pool of web servers to serve those. The difference is that your application can now scale independently of the web servers, which handle only static resources. HAProxy becomes the Layer 7 proxy in front of it all, intelligently routing messages.

Configure PHP-FPM

First, create a new folder to host your PHP application’s files. For example, you could create a folder at /var/www/myapp.

    $ sudo mkdir -p /var/www/myapp

Then browse to that folder and add a file named index.php with the following contents:

    <?php phpinfo(); ?>

When this PHP file is rendered as an HTML page, it displays various PHP settings configured on your system. This is useful as a test page, but should not be used in production.

Next, navigate into the /etc/php/7.2/fpm/pool.d directory and add a file named myapp.conf. Every configuration file in this directory instructs PHP-FPM to start a new pool. A pool runs worker processes for your application only. So, by adding more pool configuration files, you can host multiple PHP applications. The directives in each file control how many worker processes to create, for example, and the method for communicating with your application. It’s easiest to make a copy of the existing www.conf file:

    $ cd /etc/php/7.2/fpm/pool.d
    $ sudo cp www.conf myapp.conf

Edit myapp.conf. At the top, you will find the pool name, www, defined between square brackets. Change this to [myapp].

    ; Start a new pool named 'www'.
    ; the variable $pool can be used in any directive and will be replaced by the
    ; pool name ('www' here)
    [myapp]

Next, set listen to be a Unix socket. This socket file will be created automatically when the application starts:

    ; The address on which to accept FastCGI requests.
    listen = /run/php/myapp.sock

At this point, if you wanted to have PHP-FPM listen on an IP address and port instead of a Unix socket, you would set listen like so:

    listen = 127.0.0.1:9000

Save the file and then restart the PHP-FPM service:

    $ sudo systemctl restart php7.2-fpm

Each configuration file that you add to the pool.d directory creates a new PHP-FPM application. They should each be assigned a different Unix socket or IP address and port combination. When the PHP-FPM service starts, it reads all of the files within that directory and fires up all of the requested applications.
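
Because every pool needs its own address, it can be handy to check for clashes before restarting the service. The sketch below is one possible way to do that, assuming the pool files can be read as plain INI text. The function name `find_listen_conflicts` is hypothetical, and the checker is not part of PHP-FPM.

```python
import configparser
from collections import defaultdict


def find_listen_conflicts(pool_files):
    """Return {listen_address: [pool names]} for addresses used more than once.

    pool_files maps a file name to the text of that pool configuration.
    """
    users = defaultdict(list)
    for filename, text in pool_files.items():
        # Lines starting with ';' are treated as comments by default.
        parser = configparser.ConfigParser(
            delimiters=("=",), interpolation=None, strict=False)
        parser.read_string(text, source=filename)
        for pool in parser.sections():
            listen = parser.get(pool, "listen", fallback=None)
            if listen:
                users[listen].append(pool)
    return {addr: pools for addr, pools in users.items() if len(pools) > 1}
```

An empty result means that no two pools share a socket path or an IP address and port combination.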

Configure HAProxy

Now that you have the PHP-FPM service running and have configured it to host a PHP application, the next step is to connect it to HAProxy. Install HAProxy version 2.1 or later and edit the file /etc/haproxy/haproxy.cfg. Here is a barebones example that uses FastCGI to communicate with PHP-FPM over a Unix socket:

    global
        log /dev/log local0
        user haproxy
        group www-data

    defaults
        log global
        mode http
        option httplog
        option dontlognull
        timeout connect 5s
        timeout client 50s
        timeout server 50s

    frontend myproxy
        bind :80
        default_backend phpservers

    backend phpservers
        use-fcgi-app php-fpm
        server server1 /run/php/myapp.sock proto fcgi

    fcgi-app php-fpm
        log-stderr global
        docroot /var/www/myapp
        index index.php
        path-info ^(/.+\.php)(/.*)?$

In the global section, it’s necessary to set user and group directives so that HAProxy will have permission to access the Unix socket that was created by PHP-FPM. For example, on Ubuntu 18.04 the Unix socket at /run/php/myapp.sock is assigned a user and group named www-data. By setting HAProxy’s group to this, it will have access.
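
The permission check behind this can be approximated as follows. This is a simplified sketch of the usual Unix owner, group, and other write-permission rules, since connecting to a Unix socket requires write access to the socket file. It ignores ACLs and the search permission needed on parent directories, and the function names are hypothetical.

```python
import os
import stat


def can_connect(mode, owner_uid, owner_gid, uid, gids):
    """Return True if a process with this uid and group ids may write to the socket."""
    if uid == 0:
        return True  # root bypasses the permission bits
    if uid == owner_uid:
        return bool(mode & stat.S_IWUSR)
    if owner_gid in gids:
        return bool(mode & stat.S_IWGRP)
    return bool(mode & stat.S_IWOTH)


def can_connect_path(path, uid, gids):
    """Apply the same check to a socket file that exists on disk."""
    info = os.stat(path)
    return can_connect(info.st_mode, info.st_uid, info.st_gid, uid, gids)
```

For a socket with mode 0660 owned by www-data, a process that runs as the haproxy user passes the check only if www-data is among its groups, which is what the group directive arranges.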

Next, in the backend phpservers, the line use-fcgi-app php-fpm enables FastCGI and uses the FastCGI settings from the section named php-fpm.

    backend phpservers
        use-fcgi-app php-fpm
        server server1 /run/php/myapp.sock proto fcgi

Note that the server line specifies the path to the Unix socket and a proto argument set to fcgi. If you had configured PHP-FPM to listen on an IP address and port, then you would use that here, such as:

    server server1 127.0.0.1:9000 proto fcgi

The fcgi-app section tunes how HAProxy communicates with the PHP-FPM application.

    fcgi-app php-fpm
        log-stderr global
        docroot /var/www/myapp
        index index.php
        path-info ^(/.+\.php)(/.*)?$

Here is a summary of these settings:

| Directive | Description |
|---|---|
| log-stderr | Logs the messages that the FastCGI application writes to STDERR. The global argument sends them to the log targets defined in the global section. |
| docroot | Sets the directory of your PHP script on the remote server. |
| index | The name of the PHP script to use if no other file name is given in the requested URL. |
| path-info | The regular expression HAProxy uses to extract the PHP file name from the rest of the requested URL. |
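
To see what the path-info expression captures, you can try the same pattern in Python, whose regular expression syntax treats this particular pattern the same way. The helper name `split_path` is hypothetical, and the sketch does not model what HAProxy does with paths that fail to match.

```python
import re

# Same pattern as the path-info directive: group 1 is the script name,
# group 2 is the optional extra path that follows it.
PATH_INFO = re.compile(r"^(/.+\.php)(/.*)?$")


def split_path(path):
    """Return (script_name, path_info), or None if the path names no PHP script."""
    match = PATH_INFO.match(path)
    if match is None:
        return None
    return match.group(1), match.group(2) or ""


for path in ("/index.php", "/index.php/users/42", "/style.css"):
    print(path, "->", split_path(path))
```

A request for /index.php/users/42 is split into the script /index.php and the extra path /users/42, which PHP applications commonly use for routing.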

Restart HAProxy for these changes to take effect. Then you should see the PHP web page displayed when you navigate to the proxied URL. Add as many PHP applications as you would like, defining a new backend and fcgi-app section for each.

Be sure to update your version of PHP-FPM to prevent an attacker from sneaking invisible characters into the URL, leading to a remote code execution vulnerability (CVE-2019-11043). If that’s not possible, you can use HAProxy ACLs to block line-feed and carriage-return characters:

    http-request deny if { path_sub -i %0a %0d }

Or employ the HAProxy Enterprise WAF with the following ModSecurity rule:

    SecRule REQUEST_URI "@rx %0(a|d)" "id:1,phase:1,t:lowercase,deny"
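
Both rules look for a percent-encoded line feed or carriage return in the request. The following Python sketch mirrors the logic of the HAProxy ACL, a case-insensitive substring match, so that you can test sample paths offline. The function name is hypothetical, and the sketch assumes the check runs against the path exactly as the client sent it, before any URL decoding.

```python
def has_encoded_newline(path):
    """Mimic `path_sub -i %0a %0d`: case-insensitive substring match."""
    lowered = path.lower()
    return "%0a" in lowered or "%0d" in lowered


samples = ["/index.php", "/index.php/a%0Ab.php", "/index.php?x=%0d"]
for sample in samples:
    # The ACL inspects only the path, so drop the query string first.
    path_only = sample.split("?", 1)[0]
    print(sample, "blocked" if has_encoded_newline(path_only) else "allowed")
```

Note that the ACL inspects only the path, whereas the ModSecurity rule inspects the whole request URI, including the query string.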

Isolate Your Application Inside a Chroot

PHP-FPM supports isolating different PHP applications within their own chroot, or jailed directory, on the system. This is good for security, but it also gives you an added perk. It lets you decouple the location of your PHP files from your proxy. HAProxy won’t need to know where your application’s files are stored on the PHP-FPM server. That will make sense in a moment.

First, configure your PHP-FPM pool to use a chroot. Go to the directory /etc/php/7.2/fpm/pool.d and edit the myapp.conf file. Find the chroot setting and change its value to the path of your PHP application’s directory.

    ; Chroot to this directory at the start. This value must be defined as an
    ; absolute path. When this value is not set, chroot is not used.
    chroot = /var/www/myapp

Now, when your application starts, PHP will see /var/www/myapp as the root directory of the system. It won’t have access to files outside of this folder. In your HAProxy configuration file, you will set docroot to a forward slash.

    fcgi-app php-fpm
        log-stderr global
        docroot /
        index index.php
        path-info ^(/.+\.php)(/.*)?$

When PHP-FPM receives the request, it will perceive the root directory to be the /var/www/myapp folder. As you can see, HAProxy no longer needs to know where your PHP files live on the local or remote PHP-FPM server. You can set docroot to a forward slash for all chrooted applications. The pool configuration file’s chroot directive will determine the application’s true directory.
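
The path arithmetic can be sketched as follows. This is an illustration under assumptions, not HAProxy’s or PHP-FPM’s actual code: it assumes the script path sent to PHP-FPM is the docroot joined with the script name, and that the chroot directory is then prefixed on the host side. Both function names are hypothetical.

```python
import posixpath


def script_filename(docroot, script_name):
    """Join docroot and script name, as assumed for the path sent to PHP-FPM."""
    return posixpath.normpath(posixpath.join(docroot, script_name.lstrip("/")))


def path_on_disk(chroot, filename):
    """Translate a path seen inside the chroot into the path on the host."""
    if not chroot:
        return filename  # no chroot: the path is used as-is
    return posixpath.normpath(posixpath.join(chroot, filename.lstrip("/")))


# Without a chroot, HAProxy has to know the real directory.
plain = path_on_disk("", script_filename("/var/www/myapp", "/index.php"))

# With a chroot, HAProxy only sends "/index.php"; the pool supplies the rest.
jailed = path_on_disk("/var/www/myapp", script_filename("/", "/index.php"))

print(plain, jailed)
```

Both setups resolve to the same file on disk, but in the second one the directory is known only to the PHP-FPM pool.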

Conclusion

FastCGI provides a way for HAProxy to communicate directly with a scripted program that’s written in a programming language like PHP. By separating static content from scripts using PHP-FPM, you are able to scale them independently. By placing HAProxy in front, you gain high performance, observability and security.

