A reverse proxy is a server that connects to upstream servers on behalf of users. It usually maintains two TCP connections: one with the client and one with the upstream server. The upstream server can be an application server, a load balancer, or another proxy or reverse proxy.

Why Use a Reverse Proxy?

A reverse proxy can be used for different purposes:

improving security, performance, and scalability

preventing direct access from a user to a server

sharing a single IP for multiple services

Reverse proxies are commonly deployed in DMZs to give access to servers located in a more secure area of the infrastructure. That way, the reverse proxy can hide the real servers, block malicious requests, and choose a server based on protocol or application information (e.g., URL, HTTP header, SNI). And, of course, a reverse proxy can act as a load balancer.

Drawbacks When Using a Reverse Proxy

The main drawback of a reverse proxy is that it hides the user's IP address: because it acts on behalf of the user, it connects to the server from its own IP address. There is a workaround, running the proxy in transparent mode, but that setup can hardly pass through firewalls or other reverse proxies, and the server's default gateway must be the reverse proxy.

Unfortunately, it is sometimes very useful to know the user's IP when the connection comes into the application server. It can be mandatory for some applications, and it can ease troubleshooting.

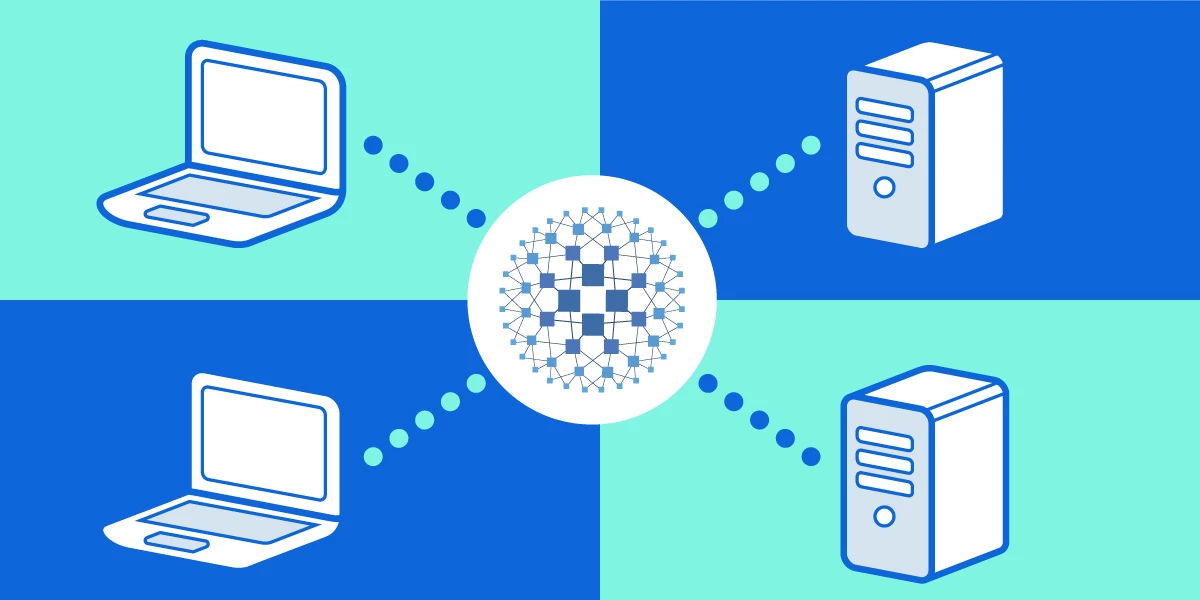

The diagram above shows a common usage of a reverse proxy: it is isolated in a DMZ and handles user traffic. It then connects to the LAN, where another reverse proxy acts as a load balancer.

Here is the request-response flow:

1. The client connects through the firewall to the reverse proxy in the DMZ and sends it its request.

2. The reverse proxy validates the request, analyzes it to choose the right farm, then forwards it to the load balancer in the LAN through the firewall.

3. The load balancer chooses a server in the farm and forwards the request to it.

4. The server processes the request and then answers the load balancer.

5. The load balancer forwards the response to the reverse proxy.

6. The reverse proxy forwards the response to the client.

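Fundamentally, the source IP is modified twice in this kind of architecture: during steps 2 and 3, when the reverse proxy and then the load balancer each open their own connection to the next host. And, of course, the more you chain load balancers and reverse proxies, the more often the source IP will be changed.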

The Proxy Protocol

The proxy protocol was designed by Willy Tarreau, an HAProxy developer. It is used between proxies (hence its name) or between a proxy and a server that understands it.

The main purpose of the proxy protocol is to forward information from the client connection to the next host.

This information is:

L4 and L3 protocol information

L3 source and destination addresses

L4 source and destination ports

That way, the proxy (or server) receiving this information can use it exactly as if the client were connected to it.

Basically, it’s a single line of text that the first proxy sends to the next one right after connecting to it. For example, here is the proxy protocol applied to an HTTP request:

PROXY TCP4 192.168.0.1 192.168.0.11 56324 80\r\n

GET / HTTP/1.1\r\n

Host: 192.168.0.11\r\n

\r\n

There is no need to change anything in the architecture since the proxy protocol is just a string sent over the TCP connection used by the sender to get connected to the service hosted by the receiver.
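To make the format of that first line concrete, here is a minimal Python sketch that builds it from the client connection details. The function name `build_proxy_v1_header` is made up for this illustration, and the sketch only covers the TCP4/TCP6 text form used in the example above; it is not how HAProxy itself is implemented.

```python
import ipaddress


def build_proxy_v1_header(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> bytes:
    """Return a text-format proxy protocol line as bytes (illustrative helper)."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    if src.version != dst.version:
        raise ValueError("source and destination must use the same address family")
    for port in (src_port, dst_port):
        if not 0 <= port <= 65535:
            raise ValueError(f"port out of range: {port}")
    family = "TCP4" if src.version == 4 else "TCP6"
    # Fields are separated by single spaces and the line ends with CRLF.
    return f"PROXY {family} {src} {dst} {src_port} {dst_port}\r\n".encode("ascii")


# The proxy sends this line first, then relays the client's bytes unchanged.
header = build_proxy_v1_header("192.168.0.1", "192.168.0.11", 56324, 80)
request = header + b"GET / HTTP/1.1\r\nHost: 192.168.0.11\r\n\r\n"
print(request.decode("ascii"), end="")
```

The point to notice is that the client's own request is not altered: the extra line is simply written ahead of it on the same TCP connection.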

Now, I guess you understand how we can take advantage of this protocol to pass through the firewall, preserving the client IP address between the reverse proxy (which is able to generate the proxy protocol string) and the load balancer (which is able to analyze the proxy protocol string).
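On the receiving side, the host has to read that first line before handing the rest of the stream to the application. The following Python sketch shows one way this could look; `ProxyInfo` and `split_proxy_v1` are hypothetical names, and only the TCP4/TCP6 text form shown in this article is handled.

```python
from typing import NamedTuple, Tuple


class ProxyInfo(NamedTuple):
    family: str
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int


def split_proxy_v1(data: bytes) -> Tuple[ProxyInfo, bytes]:
    """Split received bytes into the parsed proxy protocol line and the remaining payload."""
    line, sep, rest = data.partition(b"\r\n")
    if not sep:
        raise ValueError("proxy protocol line is not terminated by CRLF")
    fields = line.decode("ascii").split(" ")
    if len(fields) != 6 or fields[0] != "PROXY" or fields[1] not in ("TCP4", "TCP6"):
        raise ValueError("unsupported or malformed proxy protocol line")
    _, family, src_ip, dst_ip, src_port, dst_port = fields
    return ProxyInfo(family, src_ip, dst_ip, int(src_port), int(dst_port)), rest


received = b"PROXY TCP4 192.168.0.1 192.168.0.11 56324 80\r\nGET / HTTP/1.1\r\nHost: 192.168.0.11\r\n\r\n"
info, payload = split_proxy_v1(received)
print(info.src_ip, info.src_port)  # client address as seen by the first proxy
print(payload.split(b"\r\n")[0].decode("ascii"))  # first line of the untouched HTTP request
```

Once the line is stripped, `payload` is exactly what the client sent, and `info` carries the addresses the receiver would otherwise have lost.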

Stud and Stunnel are two SSL reverse proxies that can send proxy protocol information.

Configuration

#1 Between the reverse proxy and the load balancer

Since both devices must understand the proxy protocol, we will consider both the load balancer and the reverse proxy as Aloha load balancers.

Configuring the reverse proxy to send proxy protocol information

In the reverse proxy configuration, just add the keyword “send-proxy” on the server description line.

Example:

server srv1 192.168.10.1 check send-proxy

Configuring the load balancer to receive proxy protocol information

In the load balancer configuration, just add the keyword “accept-proxy” on the bind description line.

Example:

bind 192.168.1.1:80 accept-proxy

What’s happening?

The reverse proxy opens a connection to the address bound by the load balancer (192.168.1.1:80). This is no different from a regular connection flow. Once the TCP connection is established, the reverse proxy sends a string containing the client connection information.

The load balancer can now use the client information provided through the proxy protocol exactly as if the connection had been opened directly by the client itself. For example, we can match the client IP address in ACLs for whitelisting or blacklisting, in stick-tables, etc. It also makes the “balance source” algorithm much more effective: without the proxy protocol, every connection would appear to come from the reverse proxy's address and would hash to the same server.
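To illustrate the idea of matching on the announced client address, here is a small Python sketch of an allowlist check. It is only an analogy for what an ACL does with the source address, not HAProxy code; the `ALLOWED_NETWORKS` list and the `client_is_allowed` function are assumptions made up for this example.

```python
import ipaddress

# Hypothetical allowlist for illustration; not taken from the article.
ALLOWED_NETWORKS = [ipaddress.ip_network("192.168.0.0/24")]


def client_is_allowed(proxy_line: str) -> bool:
    """Check the client address announced in a text-format proxy protocol line."""
    fields = proxy_line.rstrip("\r\n").split(" ")
    # fields: PROXY, family, source IP, destination IP, source port, destination port
    client_ip = ipaddress.ip_address(fields[2])
    return any(client_ip in network for network in ALLOWED_NETWORKS)


print(client_is_allowed("PROXY TCP4 192.168.0.1 192.168.0.11 56324 80\r\n"))  # True
print(client_is_allowed("PROXY TCP4 10.0.0.7 192.168.0.11 40000 80\r\n"))     # False
```

The decision is made on the address carried in the proxy protocol line, not on the address of the proxy that opened the TCP connection.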

#2 Between the load balancer and the server

Since the load balancer knows the client's IP, we can use it to connect to the server.

In your HAProxy configuration, just use the source parameter in your backend:

backend bk_app

[...]

source 0.0.0.0 usesrc clientip

server srv1 192.168.11.1 check

The load balancer uses the client IP information provided by the proxy protocol to connect to the server (the server sees the client IP as the source IP). Since the server will receive a connection from the client's IP address, it will try to reach it through its default gateway. In order to process the reverse NAT, the traffic must pass through the load balancer, which is why the server’s default gateway must be the load balancer. This is the only architecture change.

Compatibility

The kind of architecture in the diagram will only work with Aloha load balancers or HAProxy.