In this presentation, Tobias Haag describes the journey, the challenges, and the successes in building a client-side load balancing solution at Yammer. His team first migrated services to Azure in order to offload networking infrastructure to a trusted cloud provider. They then had three primary goals: improve resiliency and reliability, increase developer velocity, and achieve compliance and security. They built a platform called DHAMM that includes Docker, HAProxy, Azure, Mesos and Marathon.

HAProxy was key to implementing client-side load balancing because it provided routing, health checking and SSL. Their platform runs 60,000 HAProxy instances and they can handle 450,000 request per second at peak load.

Transcript

All right, awesome! So thank you all for having me. It’s a much bigger crowd than I’d anticipated, which is a good sign for HAProxy, I think, and only slightly intimidating; but last night when testing these lights were way more way more scary. So it’s a good thing now. This presentation, I kind of had a rough idea of what I wanted to talk about and I built all the decks and slides and then, basically, over the course of the last presentations everyone has said the same thing. So, I made a couple changes to account for that and instead of giving you the same spiel that has been told over and over again, I’m trying to focus on the few oddities that make it special to Yammer and maybe special at Microsoft as a whole.

So without further ado, this is really about moving us into the cloud, which I think is the first presentation that really talks about using a cloud provider to do all our data center infrastructure work and a little bit of what the experience is, maybe a little bit of an incentive to move to Azure. There’s no… there’s a little bit of a marketing spiel, obviously, but for the most part I’m trying to focus on how HAProxy really helped us make this move fairly easy and for the hard parts, it was pretty much stones that we put in our way ourselves.

Early Days, Data Centers Before 2018

So getting into it, in 2014 or 2013 we started our effort to go into Microsoft data centers out of our current colo that we had in California somewhere; and we had to invent the data space, the data center space, completely from scratch again because that’s what you do when you work in infrastructure software. So, we ended up with something that we called Zeus & Friends. Zeus being the overarching cloud manager that helped us provision VMs or provision servers, manage their network infrastructure, handle the load balancing aspect of things, prepare them for services to run on top of them, and then set up the application stack that we needed to actually run all the things. So we call this Zeus & Friends.

It was written in Java. It handled roughly 2000 servers, on or off, out of which probably 1500 were active at any given point in time. We did a very traditional routing stack that you’ve probably heard in most of the presentations today. So we use IPVS as a layer 4 load balancer and then we drop into HAProxy behind that as the layer 7 magic that ties it all together for us because IPVS, very static, HAProxy relatively static at the time where we had to do a lot of reloading and, you know, the switching between the active connections into a new process that would handle the updates was a little bit of a challenge at the time.

But we managed, nonetheless, with little to no losses, and then we were really keen on figuring out what the container space had to offer at the time and Docker, being in its infancy, we decided to run with LXC, which continues to be a very, very stable platform to run your chrooted containers. But ultimately, we wasted a lot of resources by just doing a one-to-one mapping between server and application container and you just hit the sand boxing around it.

So then, why did we move? For one, scale. We did have a couple of requests to go into other geographies and building out and buying a data center is never really fun to do. Lots of things have to fall into place, you need to get your hardware in order, you need to figure out how to maintain all of this, different teams, different locations; and Microsoft had a process for this, but it was a six-month-plus effort for us and we thought moving to the cloud might take longer, but ultimately we get this instant scale on demand. Wherever Azure goes, we have the ability to have a presence there. So, we can really shape what we need out of Azure that provides us with the most recent CPU’s, the most recent SKUs for, say, network adapters, maybe GPU’s, in the future and we get a massive geographic reach, right?

On top of which, a lot of things that were really a pain for us in the days of the fixed data center that we bought, was mainly around networking. None of us, me included, are really network engineers. We never trained as such and then managing something like BGP route advertisement is intimidating, I would say, and taking all of that out and leaving that up to Azure is a huge boon because we could really focus on what we wanted to do and that was get our users, as well as our developers, a tool at hand that they can use to quickly get their changes out, get them out reliably, make sure that everything is in place, and give us a really good way of handling, say, compliance aspects to the business. Where Microsoft is really big about is the most recent EU governance laws around data handling; means that we have to be closer to our customers with the data. It’s not acceptable necessarily anymore to be only in the US, and with something like our own data centers it’s really hard to make this in a timely fashion.

However, cloud providers…and this was, I think, today…are not the best of things if they don’t work. So, we experienced that here and there. So things break occasionally and unfortunately your uptime is somewhat tied to the cloud providers you choose. In this case, it was only build tools and I guess Google had a tough day in Europe as well today, which is something that really drove our design in terms of how do we deal with resiliency across the stack, rather than figuring out how it can we be as performant as physically possible. And I’m going to follow up with this later. HAProxy really helps us there as well.

Design Considerations

So now, one of the parts that I already went into, considerations on resiliency, we had a couple more.

So “Resiliency and Route Reliability” was one big bucket that we needed to care about, but we also needed to care about “Developer Velocity”. In the past, we had the deploys that were taking, on the order, four to five hours for a single application to roll out because we had to be really careful with the application. We had to drain one container at a time and we really wanted to have deploys that can take seconds in the best case and maybe lower minutes or maybe less than an hour in the worst case; and then, finally, that’s the one thing that really hit us after the acquisition was that compliance and security is real big deal in Microsoft and we can’t be behind that. So, we also focus a lot of energy into that.

So, resiliency and reliability. As I mentioned before, we wanted to be as vanilla as possible in our networking configuration. Let Azure do the heavy lifting. Let them figure out BGP routing, shortest path algorithms and a lot of things I’ve heard about. We just cut that layer out. We don’t want it. We also don’t want to deal with servers that blow up and bad discs or RAID arrays and that fun stuff. We leave it up to Azure to shut the VM down, replace it, get us onto a new host, be done. We really needed this multi-region active-active, which goes back to if your cloud provider has problems in one region, you just fail over to the other region and start serving out of there; and then reactive scale is the key piece that allows us to go into the geographies that we wanted to be in or that we needed to be in to be in the same page as Office365’s other deployments and groups.

So from there, we started coming up with a plan that went towards container orchestration at a different scale than we’ve done it before; and we figured Docker is a big thing and it’s been growing ever since we dropped our first iteration on LXC. Developers have been shouting at us to bring Docker containers, Docker containers. It’s so much better and everything is great with Docker. Which, in my experience, is, “Eh. It’s okay.” It has it’s issues.

But we also wanted to make sure that developers can drop their containers in whatever quantities they really needed it. We wanted to be able to give them the scale they need that Azure gave us on the infrastructure level, which previously it took us probably on the order of two hours if they wanted to really scale up their servers to give them a new server, bootstrap them with the right configuration, DNS entries, networking configuration, HAProxy configurations, before they could even deploy it; and what we wanted to do is once they create their service definition, similar to what you do in Kubernetes and pods, is you just spin up as many as you need, as long as we have capacity still in Azure.

Lots of the other things listed here have been covered, so I’m not going to really go into it. The one thing is the “Automatic recovery on fabric failure”. Cloud providers, because you don’t maintain your own hardware, have this habit of maybe shutting you down randomly, rebooting VMs that, maybe, have critical applications running. So, we wanted to be resilient to that and find a way to…if not completely make us defend ourselves against that…at least be resilient enough that we don’t see much of a customer impact when that happens, ideally none. And I think we got very close to that.

And the last big bucket: Compliance and Security, the most fun bucket. We decided to embrace it a little bit. One of the things is we do SSL across everything. So, be that internal inside the data center, inside the region, SSL encryption has to happen. We wanted the apps to be isolated. Obviously, Docker gives that out of the box, but running unprivileged Docker containers is definitely a no-go. We needed to reduce the need for shell access so people wouldn’t just go into production machines or to production containers and do weird things, and basically just follow the governance law that is being enacted in various locations, predominantly, fortunately, in the EU where they’re on the forefront of passing laws that really make our life slightly complicated. I would say for the better.

Results

So, results. DHAMM: Docker, HAProxy, Azure, Mesos and Marathon. So funnily enough, we’re the second ones that actually used and are still on the ancient technology formerly known as Mesos and Marathon. Now in favor of Kubernetes, very few people know it, but we started at a time when Kubernetes was still in its infancy, similar to where Docker was when we started with our previous infrastructure. It feels like we’re trying to be ahead of times, but always end up slightly behind. So that’s a common theme.

We decided very consciously to use an operating system that is, by nature, extremely restrictive. So there’s no package managers installed; Read-only file systems because we run everything on top of Docker or we build Go binaries that can actually run in these environments and it makes it really easy for us to use Azure’s capabilities and VMs that we can pretty quickly spin up to actually bootstrap these VMs just by the design of the operating system. We also wanted to protect our customers from individual impact of other tenants messing with their…or generating too much traffic in certain regions. So we “celled” our environment into chunks of Azure and Azure networking that allows us to pin certain tenants to certain cells, and with that we can take more outages of fewer customers actually noticing it. And last, but not least, we always run an active-active pair so we have always two regions active and whenever there’s a service outage of even a single service, we can pretty seamlessly fail over to the other side of things.

All right. So, application routing. And this is, I think, the big overarching theme of all the container orchestration that is going on right now. Because you’re such a dynamic environment, how do you actually get away with routing your applications to the correct instances or have your dependencies route to the correct instances? So you basically build a discovery mechanism. Lots of people use Consul. There’s the…whatchamacallit? Kubernetes load balancer? Ingress controller. There it goes! And in Marathon and Mesos at the time, there was this thing called Marathon LB, which was basically a Python script that either instrumented NGINX or HAProxy with a template that you then have to manually reload, which was a bit of a joke. So it was very, very early on. So we ended up designing our own, obviously.

So that’s our previous stack. Customer’s getting routed to, say, Service A. That sits in front of an LVS IPVS node with a HAProxy in the backend and then it traditionally just routes to a couple of the VMs. The VMs have downstream dependencies. They basically just bubble up to the stack, to the LVS load balancer, and then route to the next load balancer; and obviously, that’s a massive single point of failure for us.

And that was the change we made to the new system. We ended up saying that we really wanted to do client-side load balancing wherever we can get away with it and avoid this going up to the edge and bubbling back down again, but we also wanted to avoid direct service-to-service communication because it has a lot of implications for what the service team has to actually own. So we didn’t want to have every single service implement some form of Consul API because it’s just a lot of work. If you have lots of teams working on lots of services…we’re running on the order of 150 microservices on this…if you have various languages you need to support, it’s always a headache and turns out my team decided that we just use HAProxy for all of this.

So, as I mention early challenges here, service discovery is high up there, but once you start doing client-side load balancing you have to consider what that means for your health checking logic as well. Say I run a health check once every second to every backend that I have, but now I have a backend on every single server that I’m running physically that has a downstream dependency to something else. All of a sudden, I’m magnifying my health checks to a scale of tens to hundreds, thousands per second depending on how many instances you have and because you can scale your Docker containers to be extremely small, say one CPU on a sixteen-core machine. You can run hundreds if not thousands of them that you need to health check.

What a lot of other people have done in the past, and I heard it here before as well, is to just offload this to another system. But we wanted, also, to avoid bringing in more and more systems. So, you don’t see Consul here or any other more homegrown system because we wanted to keep it as simple as we could get away with. And then, zero-downtime deployment, I think, is one of the bigger ones that no other container orchestrator gives you out of the box and we needed to find a way to do the same thing with HAProxy, ultimately.

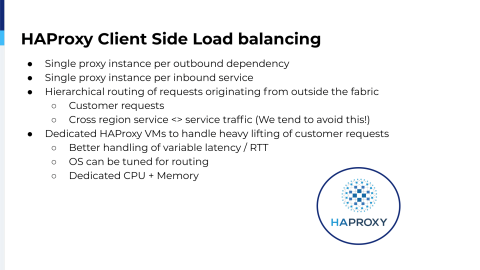

The way we ended up is we basically run a single proxy instance per outbound dependency. So if an application says, “I need to talk to a service”, we spin up an HAProxy instance on the local machine where an instance of that container runs. Additionally, for every single instance running, we also create an inbound load balancer instance; and that all cobbles themselves together in order to make this fabric work for us.

So at this point, we’re covered on the internal communication stream, but we still haven’t routed a request from the client’s end, and for that we just use what works and that’s the typical hierarchical edge load balancing. Why change a running system if you don’t have to. So here, we again reused what we had in the previous data center: just get some HAProxy VMs dedicated to HAProxy instances that basically know where all the inbounds are distributed and then, in this case, we can optimize for low latency request routing. We can tune the TCP stack a lot better.

So, next we needed a tool that I termed mostly load balancer orchestration, which an Ingress Controller is a good example of that. But at the time, again, there wasn’t anything there. So we wrote something called Lodbrok, which is a Viking king or something like that. I don’t know. We didn’t come up with the name, but we had various gods/deities in the past, so Norse sounded to be the next thing. It basically handles the service mesh aspect of our environment as well as acting as a load balancer configuration agent that maintains and controls all the different configurations that we have, life cycle of HAProxy, reloads, socket updates, that kind of stuff. We basically have it listen to Marathon applications popping up and out of existence as we go. So, very similar to previous talks again on how you discover your applications.

But instead of tying that to the routing aspect of things, we actually augmented with some additional metadata. We look locally on the machine for a Lodbrok agent to see if Mesos tasks are actually starting and only then do we introspect and see what is the metadata we need to pull out to make the routing layer start acting. And once we have all this data, we just shove it into Zookeeper because when you run Mesos, you have Zookeeper out of the box. And it turns out it can withstand a lot of punishment before it breaks, and at that point every Lodbrok everywhere in our cluster will start getting the updates and start making their routing updates.

We kind of misused or maybe used…not sure what the developers of Marathon thought…but there’s a metadata field in Marathon for every application they call “labels”; and with that we inject and let service developers inject a lot of configuration around load balancing. So, for example, they can decide whether it’s a TCP or an HTTP load balancer. Should we use load balancing algorithm leastconn? Round robin? Hashing and whatnot? And basically these labels help us augment the experience and the developer can change that on a deploy rather than us going out and changing individual load balancers and worrying about massive configuration sprawl everywhere. And at the end of the day, it’s really as simple as having a Golang template that applies a configuration of an HAProxy configuration with all these parameters, munges it together, and then basically handles it in either a reload or just a socket update of anything that can be dynamically updated.

This is a little bit what it looks like. So, it still looks fairly traditional and I got another picture that might show that a little bit better. So, I’m skipping right ahead.

So this is what we ended up with. I’m now going into the security and compliance phase of things. Now that we have the routing parts in place, we know how we communicate with applications. We kind of needed to fix a problem on how do we ensure SSL across the stack? One of the things you can do is you can do something to have every application enforce SSL, handle their own SSL certificates, manage them, rotate them and whatnot. We figured that’s, again, a lot of overhead on her services team. So we can probably do it ourselves.

We only have to worry about SSL once you leave the physical VM, so we might as well use a pseudo tunnel and misuse HAProxy in that fashion. So, the idea here is every single HAProxy, as I mentioned, has an outbound and an inbound, and we can actually have the outbound HAProxy listen on a local port only and listen only on HTTP or pure TCP. And then the services, unaware that they’re actually talking encrypted, will just talk to an HAProxy over the protocol that they expect, which mostly is HTTP. And then HAProxy there terminates the connection into the next destination host via SSL automatically, decoding the package on the receiving end and sending it as a normal HTTP or TCP packet back into the container of the downstream dependency.

That has a couple of major benefits for us. I think it was mentioned a couple of times that the closer you get to your customer and terminating SSL and then using long-lived connections, the less you have to worry about the latency that this introduces, the renegotiation, handshakes; and yes, we’re in a data center, things are much, much closer together, but it turns out this is still a lot of overhead that you have to deal with.

So, in an environment like ours where we have a lot of services that share the same dependencies…there’s a couple of core services that everyone seems to talk to…we only have to spin up one HAProxy of that and whatever container talks to it, be that from a different application or same application, can actually make use of the reuse of the existing backend connections. And with that, we amortize a little bit of the SSL negotiation cost that it still adds into your environment if you really want to do SSL termination everywhere instead of just your customers coming in.

All right, so how do we end up doing it? The way Lodbrok was designed is, we went with several modules of functionality that we thought was really helpful for us thinking conceptually about how we structure our design. So, there’s a Mesos agent that only runs where Mesos agents are currently running, which we use to discover any new Docker container that starts up. As a Marathon agent, that basically pulls all the state from Marathon and sees what is new. Is it a new application that we haven’t seen before? Do we need to remove an application? That kind of stuff. And then we have something called an Ingress Agent, which is literally our edge load balancer, in the HAProxy sense, in Azure that listens for everything, for every application that has any activity it will basically fetch that data and make sure that we route from our dedicated HAProxies to these downstream instances.

As I mentioned, we also get Zookeeper basically for free in a Mesos environment. So, we made this decision of using Zookeeper. I got a couple numbers at the end that will show how much of a beating you can put on Zookeeper before it breaks and it works really well. It has this Zookeeper Watches where you can register for certain paths in Zookeeper’s data store for events and it will then notify individual instances that something has changed. So that event-driven system helps us to very quickly realize that an application has stopped or started and while we rely very heavily on HAProxy to detect that an application has gone away much quicker than Zookeeper can inform us, it helps us to be eventual consistent in our HAProxy configurations in our routing stack.

So, we kind of layered everything to an extent. We were wanting to fail as stale as possible because, we know, we would be failing. I think in our industry, it’s kind of unavoidable. You write bugs, things fail at the worst possible times, so just embrace it and make sure that your environment naturally recovers and Lodbrok has very many facets of this to kinda embrace it rather than be terrified of it.

Once we have updates coming through, Lodbrok basically just figures out: Do we need to do a change that requires an entire process reload of HAProxy or can we use the socket API? So, an instance got scaled up or if an application got scaled up in more instances, the only thing we have to do is take the socket API and write a couple new backends in, give it the right ports, and everything is happy once the health check works. In cases of, say, the change of load balancing strategy, TCP and HTTP mode…I’m not sure why you would ever do that and change that during runtime, but things like these we have to just write a reload and that’s the single place where we really don’t have the zero downtime deploy sorted out. So, whenever someone makes a change of that scale we still lose a few packets here and there, but overall if it’s just scaling up and down we’re basically back to zero downtime deploys; and yeah the developers basically have the freedom to just deploy the Marathon code and we have extensive pipelines that allow you to define exactly what you want and, once your deploy is complete, you can be fairly confident that the changes are propagated around all the load balancers within usually 30 to 60 seconds at the most.

All right, here is a quick example of how some of our configurations look like.

| defaults | |

| mode {protocol} | |

| balance {balanceMode} | |

| timeout client {clTO} | |

| timeout connect {cTO} | |

| timeout server {sTO} | |

| timeout http-keep-alive {kaTO} | |

| listen ::{service}-{servicePort} | |

| acl down nbsrv(hc-{servicePort}) lt 1 | |

| monitor fail if down | |

| monitor-uri /hc | |

| option httpchk GET /hc | |

| bind {vmIP}:{servicePort} ssl crt {crt} ciphers {cipherList} | |

| server {dockerID} {vmIP}:{containerPort} check port {containerPort} observe layer4 on-error mark-down fail 2 rise 1 |

It’s fairly vanilla. Again, this is a very common theme and I think one thing that resonated, during the keynote that William gave this morning is, simplicity has a lot of major benefits. So, the simpler you can be, the more performant you can be, the more reliable you’ll probably be. And a lot of this thought process went into all our designs as well. We’re trying to be as simple as we can get away with and only add complexity when it’s really necessary. So here, really simple. Everything in curly braces is basically a template-ized parameter. We use SSL on inbound, as I mentioned. We put the ciphers together, run a couple of ACLs. One thing that pops out here I hope is the monitor fail if down and monitor-uri /hc. I mentioned that health checks can become a bit of a problem for HAProxy or for downstream applications, especially if you run a Ruby stack, which doesn’t deal well with concurrent requests at all. So, we tried various ways of handling that, but in the end, the monitor uri and monitor fail if down statements or options in HAProxy helped us offset and offload some of the health checking logic onto the HAProxies that act as inbounds. So, if an outbound that really wants to know if all the backends are up makes a health check, it actually just talks to this path on the inbound HAProxy configuration, which then reports with the health check; and then we offload the health check inside HAProxy to check for the number of servers being down. We actually in recent times added another backend that explicitly has a couple of changes to how we do health checks to be a little bit more responsive. So we might actually check several backends and some of them might not ever get traffic just to get around some of the intricacies of the problem, but we end up having technically only a handful of requests. So, every inbound configuration…if you have like three or four containers of the same type, you will basically send three or four health checks to the application. Every application gets one based on the check frequency and the application never sees more than that. We still shoot an enormous amount of health checks through the system, but it turns out HAProxy is entirely capable of handling all of that because it basically responds instantaneous…near instantaneous.

| listen ::{service}-{servicePort} | |

| load-server-state-from-file global | |

| bind {dockerBridgeIP}:{servicePort} | |

| option httpchk GET /hc | |

| server-template appserver- 1-20 10.0.0.1:{servicePort} ssl check-ssl check port {servicePort} fall 2 observe layer4 on-error mark-down disabled |

So that’s the call out on the inbounds, and on the outbounds the only thing that is really different is that the we…I think it was two years ago when it was released. Was it 1.8 that introduced server templates? Not entirely sure. Don’t hold me to it. But introducing…the introduction of server templates really changed our game where, basically, we’ve always reload, always had some non-zero downtime issues. We now were able to use server templates and we predefined usually the amount of…twice the amount of…backends that an application requests on Marathon, just to have some headroom.

So if you scale up, we have a couple of backends left to not need a reload and then we basically start everything as disabled and go through the socket API to list all the IP addresses and ports that we really need to balance to and then apply it. So that allows us, really, when a change of application instance counts comes along, whether that be through failure or deployments scaling up down action, we can basically just go with a really quick socket call and go, “You’re down now. You go in drain mode, and once you’re drained you basically get forgotten about until the next change needs to happen.” One thing to point out here as well is that everything is basically ssl check-ssl. So, any time we leave a machine, we basically guarantee that you’ll be SSL encrypted.

All right, I mentioned most of these already, let me just double check if I didn’t miss one. Perfect. One of the things, and I think it’s been said before, HAProxy is a very performant load balancer. So that’s what drove our initial decision because we had really good experience with that. The one thing I would like to add to that, I think, is since we started in 2015, it’s come a long way in terms of how easy it is to configure, which parts are easy to configure, and I think that made a major change in making this kind of environment really manageable for us and maintainable for us.

So, just a couple of stats. I think we’ve got 10 minutes left. Right now with this entire deployment, we run across four geos, two continents. We run 2000 Lodbrok agents, but we actually run 60,000 HAProxy instances. I think that’s, at some point that’s almost as many as we get requests per second so it might be a little bit overkill, but it’s just the nature of running it; and it turns out that HAProxy, just spinning it up is extremely cheap for us. The amount of overhead that we get compared to our applications is almost negligible. In steady state, HAProxy doesn’t use any CPU or any noticeable CPU. Since we’re not plagued by this, we run thousands of certificates that need to be shared across processes. The memory overhead is also almost negligible and, in the end, we end up running 26,000 Docker containers across our stack.

Zookeeper. We’ve got four Zookeeper clusters, three nodes each, and they peak at around 150,000 requests per second, which is a lot going read and write operations into Zookeeper. Obviously, most of those are reads. If all of those were writes I think even Zookeeper would crumble. But it goes to show that Zookeeper is a solid, solid piece of technology that, as long as you stay under one megabyte of data, you’re probably fine.

Just from our point of view we are internally…because we run microservices and the way they are designed we have a large amount of fanout. So we run 450,000 requests a second at peak, internal and external combined, but we only run about 80-100,000 requests from clients. So, the real heavy lifting for HAProxy comes from our internal fanout. And that pretty much sums it up. I would say I blame Ruby for that. All right. That sums it.