Recently, there have been some attacks against a website that aimed to steal user identities. In order to protect their users, major website owners had to find a solution. Unfortunately, we know that sometimes, improving security means downgrading performance.

SSL/TLS is a popular way to improve data safety when data is exchanged over a network. SSL/TLS is used to encrypt any kind of data, from the login and password on a personal blog service, through an e-commerce shopping cart, to a company extranet. Recent attacks showed that to protect users' identities, all traffic must be encrypted.

Note that SSL/TLS is not only used on websites; it can encrypt any TCP-based protocol, such as POP, IMAP, SMTP, etc.

Why This Benchmark?

At HAProxy Technologies, we build load balancer appliances based on a Linux kernel, LVS (for layer 3/4 load-balancing), HAProxy (for layer 7 load balancing), and stunnel (SSL encryption), for the main components.

Since SSL/TLS is fashionable, we wanted to help people ask the right questions and make the right choice when they have to benchmark and choose SSL/TLS products.

We wanted to explain to everybody how one can improve SSL/TLS performance by adding some functionality to SSL open-source software.

Lately, on the HAProxy mailing list, Sebastien introduced us to stud, a very good, but still young, alternative to stunnel. So we were curious to bench it.

SSL/TLS Introduction

The theory

SSL/TLS can be a bit complicated at first sight.

Our purpose here is not to describe exactly how it works; there is useful reading for that:

the TLS RFCs: RFC 2246 (TLS 1.0), RFC 4346 (TLS 1.1), and RFC 5246 (TLS 1.2)

the TLS Wikipedia page: http://en.wikipedia.org/wiki/Transport_Layer_Security

SSL main lines

Basically, there are two main phases in SSL/TLS:

the handshake

data exchange

During the handshake, the client and the server generate three keys that are unique to that client and server, valid only for the lifetime of the session, and used by the symmetric algorithms to encrypt and decrypt data on both sides.

Later in the article, we will use the term “symmetric key” for those keys.

The symmetric key is never exchanged over the network. An ID, called the SSL session ID, is associated with it.

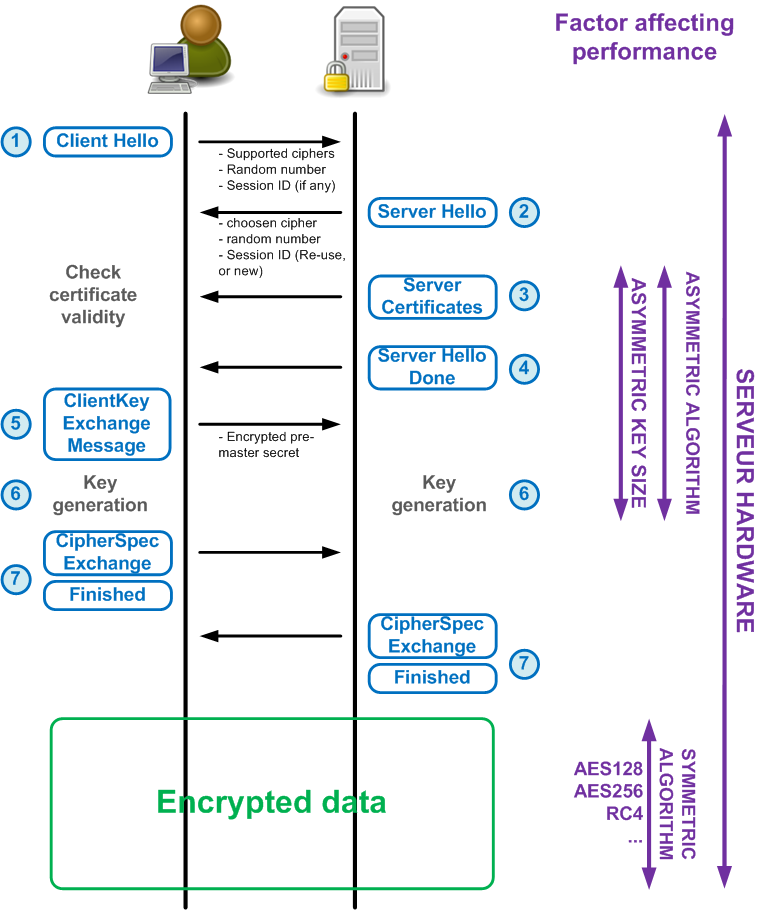

Let’s have a look at the diagram below, which shows a basic HTTPS connection, step by step:

We also represented on the diagram the factor which might have an impact on performance.

The client sends the server a Client Hello packet with some random numbers, its supported ciphers, and an SSL session ID if it wants to resume an SSL session.

The server chooses a cipher from the client's cipher list and sends a Server Hello packet, including a random number.

It generates a new SSL session ID if resuming is not possible or not available.

The server sends its public certificate to the client, and the client validates it against CA certificates.

==> Sometimes, you may have warnings about self-signed certificates.

The server sends a Server Hello Done packet to tell the client it has finished for now.

The client generates the pre-master key and sends it to the server.

Client and server generate the symmetric key that will be used to encrypt data.

Client and server tell each other that the next packets will be sent encrypted.

Now, data is encrypted.
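The role of the SSL session ID in the steps above can be sketched with a toy model. This is an illustration only, following the article's simplified view of a single "symmetric key" per session; it performs no real cryptography, and the class and method names (`ToyTlsServer`, `handshake`) are hypothetical.

```python
import os


class ToyTlsServer:
    """Toy model of session resumption; it performs no real cryptography."""

    def __init__(self):
        self.session_cache = {}    # session ID -> symmetric key material
        self.full_handshakes = 0   # counts the expensive asymmetric step

    def handshake(self, session_id=None):
        """Return (session_id, key), resuming when the ID is cached."""
        if session_id in self.session_cache:
            # Resumed session: reuse the cached key, skip the asymmetric work.
            return session_id, self.session_cache[session_id]
        # Full handshake: stands in for the pre-master key exchange.
        self.full_handshakes += 1
        new_id = os.urandom(16).hex()
        key = os.urandom(32)
        self.session_cache[new_id] = key
        return new_id, key


server = ToyTlsServer()
sid, key = server.handshake()        # first connection: full handshake
sid2, key2 = server.handshake(sid)   # second connection: resumed
print(server.full_handshakes, sid == sid2, key == key2)
```

Only the first call pays for a full handshake; the second one finds the session ID in the cache, which is the behavior the benchmark below measures as "transactions per second".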

SSL Performance

As you can see in the diagram above, some factors may influence SSL/TLS performance on the server side:

the server hardware, mainly the CPU

the asymmetric key size

the symmetric algorithm

In this article, we’re going to study the influence of these factors and observe their impact on performance.

A few other things might have an impact on performance:

the ability to resume an SSL/TLS session

symmetric key generation frequency

the size of the object to encrypt

Benchmark Platform

We used the platform below to run our benchmark:

The SSL server purposely has much less capacity than the client, to ensure the client won’t saturate before the server.

The client is inject + stunnel in client mode. The web server behind HAProxy and the SSL offloader is httpterm.

Some results were checked using httperf and curl-loader, and the results were similar.

On the server, we have 2 cores, and since we have enabled hyperthreading, 4 CPUs are available from the kernel's point of view. The e1000e driver of the server has been modified to be able to bind interrupts to the first logical CPU (core 0). Last but not least, the SSL library used is OpenSSL 0.9.8.

Benchmark Purpose

The purpose of this benchmark is to:

Compare the different ways of working of stunnel (fork, pthread, ucontext, ucontext + session cache)

Compare the different ways of working of stud (without and with session cache)

Compare stud and stunnel (without and with session cache)

Impact of session renegotiation frequency

Impact of asymmetric key size

Impact of object size

Impact of symmetric cipher

At the end of the document, we’re going to give some conclusions as well as some pieces of advice. As a standard test, we’re going to use the following:

Protocol: TLSv1

Asymmetric key size: 1024 bits

Cipher: AES256-SHA

Object size: 0 byte

For each test, we’re going to provide the transactions per second (TPS) and the handshake capacity, which are the two most important numbers you need to know when comparing SSL accelerator products.

Transactions per second: the client will always re-use the same SSL session ID

Symmetric key generation: the client will never re-use its SSL session ID, forcing the server to generate a new symmetric key for each request

1. Influence of Symmetric Key Generation Frequency

For this test, we’re going to use the following parameters:

Protocol: TLSv1

Asymmetric key size: 1024 bits

Cipher: AES256-SHA

Object size: 0 byte

CPU: 1 core

Note that the object is empty because we want to measure pure SSL performance.

We’re going to bench the following software:

STNL/FORK: stunnel-4.39 mode fork

STNL/PTHD: stunnel-4.39 mode pthread

STNL/UCTX: stunnel-4.39 mode ucontext

STUD/BUMP: stud github bumptech (github: 1846569)

| Symmetric key generation frequency | STNL/FORK | STNL/PTHD | STNL/UCTX | STUD/BUMP |
|---|---|---|---|---|
| For each request | 131 | 188 | 190 | 261 |
| Every 100 requests | 131 | 487 | 490 | 261 |
| Never | 131 | 495 | 496 | 261 |

Observation:

We can clearly see that STNL/FORK and STUD/BUMP can’t resume an SSL/TLS session.

STUD/BUMP has better performance than STNL/* on symmetric key generation.
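The three rows of the table can be tied together with a small back-of-the-envelope model. It is an assumption of ours, not something the benchmark tools compute: requests are treated as handled one after another, so a batch of 100 requests with one new key costs one full handshake plus 99 resumed requests. The function name `mixed_rate` is hypothetical.

```python
def mixed_rate(handshakes_per_s, resumed_tps, requests_per_key):
    """Estimated requests/s when one request in `requests_per_key` needs a new key."""
    full = 1.0 / handshakes_per_s                    # time for the one full handshake
    resumed = (requests_per_key - 1) / resumed_tps   # time for the resumed requests
    return requests_per_key / (full + resumed)


# (handshakes/s, resumed TPS, measured rate with a new key every 100 requests)
measured = {
    "STNL/PTHD": (188, 495, 487),
    "STNL/UCTX": (190, 496, 490),
}

for name, (hs, tps, seen) in measured.items():
    print(f"{name}: model {mixed_rate(hs, tps, 100):.0f}/s, measured {seen}/s")
```

For the two stunnel modes that can resume sessions, the estimate lands within a few requests per second of the measured "Every 100 requests" value, which suggests the two headline numbers (handshakes per second and TPS) are enough to predict mixed traffic on this platform.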

2. Advantage of Caching SSL Sessions

For this test, we have developed patches for both stunnel and stud to improve a few things.

The stunnel patches are applied on STNL/UCTX and include:

listen queue settable

fix for a performance regression caused by logging

multiprocess start up management

session cache in shared memory

The stud patches are applied on STUD/BUMP and include:

listen queue settable

session cache in shared memory

fix to allow session to resume

We’re going to use the following parameters:

Protocol: TLSv1

Asymmetric key size: 1024 bits

Cipher: AES256-SHA

Object size: 0 byte

CPU: 1 core

Note that the patched versions will be called STNL/PATC and STUD/PATC, respectively, in the rest of this document.

The percentage highlights the improvement of STNL/PATC and STUD/PATC respectively over STNL/UCTX and STUD/BUMP.

| Symmetric key generation frequency | STNL/PATC | STUD/PATC |
|---|---|---|
| For each request | 246 | 261 |
| Every 100 requests | 1085 | 1366 |
| Never | 1129 | 1400 |

Observation:

obviously, caching SSL session improves the number of transactions per second

stunnel patches also improved stunnel performance
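The improvement of the patched versions over STNL/UCTX and STUD/BUMP can be recomputed from the two tables. The sketch below only does that arithmetic; the helper name `improvement` is ours.

```python
def improvement(before, after):
    """Percentage gain of `after` over `before`."""
    return (after - before) / before * 100.0


rows = ["For each request", "Every 100 requests", "Never"]
stnl_uctx = [190, 490, 496]     # unpatched stunnel, ucontext mode
stnl_patc = [246, 1085, 1129]   # patched stunnel
stud_bump = [261, 261, 261]     # unpatched stud
stud_patc = [261, 1366, 1400]   # patched stud

for i, row in enumerate(rows):
    print(f"{row}: STNL/PATC {improvement(stnl_uctx[i], stnl_patc[i]):+.0f}%, "
          f"STUD/PATC {improvement(stud_bump[i], stud_patc[i]):+.0f}%")
```

The "For each request" row isolates what the patches change in handshake capacity, while the other two rows mostly reflect the session cache.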

3. Influence of CPU Cores

As seen in the previous test, we could improve TLS capacity by adding a symmetric key cache to both stud and stunnel.

We still might be able to improve things :).

For this test, we’re going to configure both stunnel and stud to use 2 CPU cores.

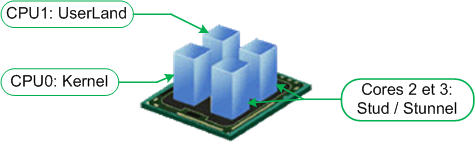

The kernel will be configured on core 0, userland on core 1 and stunnel or stud on cores 2 and 3, as shown below:

For the rest of the tests, we’re going to bench only STNL/PTHD, which is the stunnel mode used by most Linux distributions, and the two patched STNL/PATC and STUD/PATC.
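As a rough illustration of what binding a process to cores 2 and 3 means, here is a minimal Linux-only sketch using Python's `os.sched_setaffinity`. It is not how stunnel or stud were pinned in this benchmark; the helper name `pin_to_cores` is hypothetical, and it silently falls back to the cores that actually exist on the machine.

```python
import os


def pin_to_cores(wanted):
    """Pin the current process to the wanted cores that exist on this machine.

    Returns the set of cores the process may run on afterwards, or None on
    platforms without sched_setaffinity (anything other than Linux).
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    available = os.sched_getaffinity(0)   # 0 means "the calling process"
    usable = set(wanted) & available
    if not usable:
        return available                  # nothing to pin to; leave unchanged
    os.sched_setaffinity(0, usable)
    return os.sched_getaffinity(0)


allowed = pin_to_cores({2, 3})
print(allowed)
```

Keeping the SSL processes away from the core that handles network interrupts is what lets the two remaining cores spend their time on encryption only.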

For this test, we’re going to use the following parameters:

Protocol: TLSv1

Asymmetric key size: 1024 bits

Cipher: AES256-SHA

Object size: 0 byte

CPU: 2 cores

The table below summarizes the numbers we get with 2 cores and the percentage of improvement over 1 core:

| Symmetric key generation frequency | STNL/PTHD | STNL/PATC | STUD/PATC |
|---|---|---|---|
| For each request | 217 | 492 | 517 |
| Every 100 requests | 588 | 2015 | 2590 |
| Never | 602 | 2118 | 2670 |

Observation:

Now, we know the number of CPU cores has an influence. 😉

Symmetric key generation has doubled on the patched versions. STNL/PTHD, on the other hand, gains little from the second CPU core.

We can clearly see the benefit of SSL session caching on both STNL/PATC and STUD/PATC.

STUD/PATC performs around 25% better than STNL/PATC.

Note that since STNL/FORK and STUD/BUMP have no SSL session cache, there is no need to test them anymore.

We’re going to concentrate on STNL/PTHD, STNL/UCTX, STNL/PATC and STUD/PATC.
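The gain from the second core can be expressed as a speedup factor by comparing the "For each request" rows of the 1-core and 2-core tables. The sketch below is plain arithmetic on the published numbers; the helper name `speedup` is ours.

```python
def speedup(one_core, two_cores):
    """How many times faster the 2-core run is than the 1-core run."""
    return two_cores / one_core


# "For each request" row (one new symmetric key per request): 1 core, then 2 cores.
new_key_per_request = {
    "STNL/PTHD": (188, 217),
    "STNL/PATC": (246, 492),
    "STUD/PATC": (261, 517),
}

for name, (one, two) in new_key_per_request.items():
    s = speedup(one, two)
    # Efficiency is the share of the ideal 2x gain that was actually reached.
    print(f"{name}: x{s:.2f} speedup, {s / 2:.0%} of ideal scaling")
```

A factor close to 2 means the software spreads handshakes evenly over both cores; a factor close to 1 means the second core is mostly idle.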

4. Influence of the Asymmetric Key Size

The default asymmetric key size on current websites is usually 1024 bits. For security purposes, more and more engineers now recommend using 2048 bits or even 4096 bits.

In the following test, we’re going to observe the impact of the asymmetric key size on SSL performance.

For this test, we’re going to use the following parameters:

Protocol: TLSv1

Asymmetric key size: 2048 bits

Cipher: AES256-SHA

Object size: 0 byte

CPU: 2 cores

The table below summarizes the numbers we got with a 2048-bit asymmetric key, and the percentage highlights the performance impact compared to the 1024-bit asymmetric key, both tests running on 2 CPU cores:

| Symmetric key generation frequency | STNL/PTHD | STNL/PATC | STUD/PATC |
|---|---|---|---|
| For each request | 46 | 96 | 96 |
| Every 100 requests | 541 | 1762 | 2121 |
| Never | 602 | 2118 | 2670 |

Observation:

The asymmetric key size only influences symmetric key generation. The number of transactions per second does not change at all for software that is able to cache and re-use the SSL session ID.

Going from 1024 to 2048 bits divides the number of symmetric keys generated per second by roughly 5 in our environment (for example, from 492 to 96 for STNL/PATC).

With typical traffic that renegotiates every 100 requests, stud is more impacted than stunnel, but it still performs better.
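Why the key size weighs so heavily on the handshake can be felt with a rough experiment. With a cipher such as AES256-SHA, the server's asymmetric work is an RSA private-key operation, which is essentially a modular exponentiation with operands as large as the key. The sketch below times Python's built-in `pow` on random numbers of the right size. These are not real RSA keys, and OpenSSL uses optimizations that Python does not, so only the trend is meaningful; the helper name `modexp_seconds` is hypothetical.

```python
import secrets
import time


def modexp_seconds(bits, rounds=20):
    """Average time of one pow(base, exponent, modulus) with `bits`-bit operands."""
    modulus = secrets.randbits(bits) | (1 << (bits - 1)) | 1   # full-size and odd
    exponent = secrets.randbits(bits) | (1 << (bits - 1))      # as large as the key
    base = secrets.randbits(bits - 1)
    start = time.perf_counter()
    for _ in range(rounds):
        pow(base, exponent, modulus)
    return (time.perf_counter() - start) / rounds


t1024 = modexp_seconds(1024)
t2048 = modexp_seconds(2048)
print(f"1024 bits: {t1024 * 1e3:.2f} ms, 2048 bits: {t2048 * 1e3:.2f} ms, "
      f"ratio {t2048 / t1024:.1f}x")
```

The exact ratio depends on the machine and the Python version, but doubling the key size costs far more than twice the time, which matches the drop seen in the "For each request" row.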

5. Influence of the Object Size

If you have read the article carefully from the beginning, you might be thinking “they’re nice with their test, but their objects are empty… what happens with real objects?”

So, I guess it’s time to study the impact of object size!

For this test, we’re going to use the following parameters:

Protocol: TLSv1

Asymmetric key size: 1024 bits

Cipher: AES256-SHA

Object size: 1 KByte / 4 KBytes

CPU: 2 cores

Results for STNL/PTHD, STNL/PATC and STUD/PATC:

The percentage number highlights the performance impact.

| Symmetric key generation frequency | STNL/PTHD (1KB) | STNL/PTHD (4KB) | STNL/PATC (1KB) | STNL/PATC (4KB) | STUD/PATC (1KB) | STUD/PATC (4KB) |
|---|---|---|---|---|---|---|
| Every 100 requests | 582 | 554 | 1897 | 1668 | 2475 | 2042 |
| Never | 595 | 564 | 1997 | 1742 | 2520 | 2101 |

Observation

the bigger the object, the lower the performance…

STUD/PATC performs 20% better than STNL/PATC

STNL/PATC performs 3 times better than STNL/PTHD
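Requests per second drop as objects grow, but the amount of encrypted payload moved per second tells a different story. The sketch below converts the "every 100 requests" row into payload throughput, assuming 1 KByte = 1024 bytes and ignoring HTTP headers and SSL record overhead; the helper name `payload_mbit_per_s` is ours.

```python
def payload_mbit_per_s(requests_per_s, object_kbytes):
    """Payload throughput in Mbit/s, assuming 1 KByte = 1024 bytes."""
    return requests_per_s * object_kbytes * 1024 * 8 / 1e6


# Requests/s with a new key every 100 requests, keyed by object size in KBytes.
every_100 = {
    "STNL/PTHD": {1: 582, 4: 554},
    "STNL/PATC": {1: 1897, 4: 1668},
    "STUD/PATC": {1: 2475, 4: 2042},
}

for name, by_size in every_100.items():
    for kbytes, rate in by_size.items():
        print(f"{name} {kbytes} KB: {payload_mbit_per_s(rate, kbytes):.1f} Mbit/s")
```

For every product, the 4 KB run carries more payload per second than the 1 KB run even though it serves fewer requests, so the fixed per-request cost still dominates at these object sizes.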

6. Influence of the Cipher

So far, we have run our benchmark only with the AES256-SHA cipher.

It’s now time to benchmark some other ciphers:

first, let’s give a try to AES128-SHA, and compare it to AES256-SHA

second, let’s try RC4_128-SHA, and compare it to AES128-SHA

For this test, we’re going to use the following parameters:

Protocol: TLSv1

Asymmetric key size: 1024 bits

Cipher: AES256-SHA / AES128-SHA / RC4_128-SHA

Object size: 4 KBytes

CPU: 2 cores

Results for STNL/PTHD, STNL/PATC, and STUD/PATC:

The percentage number highlights the performance impact on the following cipher:

AES 128 ==> AES 256

RC4 128 ==> AES 128

| Symmetric key generation frequency | STNL/PTHD (AES256) | STNL/PTHD (AES128) | STNL/PTHD (RC4_128) | STNL/PATC (AES256) | STNL/PATC (AES128) | STNL/PATC (RC4_128) | STUD/PATC (AES256) | STUD/PATC (AES128) | STUD/PATC (RC4_128) |
|---|---|---|---|---|---|---|---|---|---|
| Every 100 requests | 554 | 567 | 586 | 1668 | 1752 | 1891 | 2042 | 2132 | 2306 |
| Never | 564 | 572 | 600 | 1742 | 1816 | 1971 | 2101 | 2272 | 2469 |

Observation:

as expected, AES128 performs better than AES256

RC4 128 performs better than AES128

stud performs better than stunnel

Note that RC4 will perform better on big objects since it works on a stream while AES works on blocks.


SSL performance: Conclusion

1.) Remember to ask for two numbers when comparing SSL products:

the number of handshakes per second

the number of transactions per second (aka TPS).

2.) If the product is not able to resume SSL sessions (by caching the SSL session ID), just forget it!

It won’t perform well and is not scalable at all.

Note that having a load balancer that is able to maintain affinity based on SSL session ID is really important. You can understand why now.

3.) Bear in mind that the asymmetric key size may have a huge impact on performance.

Of course, the bigger the asymmetric key size is, the harder it will be for an attacker to break the generated symmetric key.

4.) Stud is young but seems promising.

By the way, stud has included HAProxy Technologies patches from @emericbr, so if you use a recent stud version, you may get the same results as us.

5.) If we consider that your users would renegotiate every 100 requests and that the average object size you want to encrypt is 4K, you could get 2300 SSL transactions per second on a small Intel Atom @1.66GHz!

Imagine what you could do with a dual CPU core i7!!!

By the way, we’re glad that the stud developers have integrated our patches into the main stud branch.
