High levels of web traffic can lead to network congestion, often causing network latency, and in some cases, outages. Traffic shaping is a technique that can help prevent network congestion by controlling how much traffic enters and leaves a network.

We debuted traffic shaping in the release of HAProxy 2.7 and HAProxy Enterprise 2.7, allowing our users to control client upload and download speeds. Users can now limit the data transmitted to a server or a client to a maximum number of bytes over a specific period. By shaping traffic this way, you can keep heavy transfers from congesting your network.

In this blog post, we discuss what traffic shaping is and how you can implement it in HAProxy and HAProxy Enterprise using our bandwidth limitation filter.

What is Traffic Shaping & When Should You Use It?

Traffic shaping allows you to control the bandwidth of data flowing into and out of your load balancers. As the name suggests, it lets you shape traffic by limiting the bandwidth available to certain streams, so that bandwidth is not overused and the network does not become congested.

With traffic shaping, you can limit the maximum download speed of files regardless of a client’s connection speed. You can also limit the maximum upload speed. By implementing traffic shaping, you can ensure that:

critical applications always have sufficient bandwidth;

certain traffic is prioritized (e.g., video streaming traffic); and

network latency stays low, giving clients a smooth experience.

Traffic shaping is not to be confused with traffic policing. Both are techniques used to manage and control the flow of data into a network, but they suit different scenarios. Traffic shaping delays HTTP requests and responses when bandwidth consumption exceeds specified limits, whereas traffic policing drops traffic under extreme conditions.

HAProxy’s Solution for Traffic Shaping: The Bandwidth Limitation Filter

Traffic shaping with HAProxy is made possible by the bandwidth limitation filter.

The bandwidth limitation filter is a filter that can be applied to web traffic flowing through a network using HAProxy’s Filters API. With the bandwidth limitation filter, you can prevent network congestion by setting bandwidth limits to shape traffic.

The bandwidth limitation filter was fully realized with the release of HAProxy 2.7 and is also available for HAProxy Enterprise 2.7 and HAProxy ALOHA 15 customers.

Overview of HAProxy’s Filters API

HAProxy Technologies’ Filters API enables you to manage web traffic through the application of filters. Essentially, it’s a way to extend HAProxy to analyze and process traffic.

Filters work at the data level, also known as the stream level, operating on top of the connection and session levels. Filters are able to modify web traffic and can be chained and executed in specific orders. The execution order can have a direct impact on results, with variations in data at the outputs and inputs of filters.

Some examples of filters include HTTP compression, HTTP cache, and SPOE.


Using the Bandwidth Limitation Filter for Traffic Shaping

The bandwidth limitation filter limits the size of data sent over a stream to a maximum bandwidth and can be applied to both TCP and HTTP protocols. However, the filter is only concerned with the data that is sent, not received.

The filter can be applied to the frontend and backend sections. Several of these limitations can be configured in the same section and enabled at the same time on a stream.
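As a rough sketch of two limitations living in the same section, the fragment below declares a shared limit and a per-stream limit in one frontend and enables both on every stream. The filter names, the table name, and the sizes are illustrative assumptions, not values from a real deployment.

```haproxy
frontend http
    bind *:80
    mode http

    # Shared limit: streams from the same source address share 10m bytes per second
    filter bwlim-out limit-by-src key src table limit-by-src limit 10m

    # Per-stream limit: each stream is capped at 1m bytes per second on its own
    filter bwlim-out limit-by-strm default-limit 1m default-period 1s

    # Enable both limitations on the same stream
    http-request set-bandwidth-limit limit-by-strm
    http-request set-bandwidth-limit limit-by-src
    # ...

backend limit-by-src
    # Stick table backing the shared limit (assumed name, matches the "table" argument)
    stick-table type ip size 1m expire 3600s store bytes_out_rate(1s)
```

Because the shared filter is declared first, it is the first of the two to be applied, whatever the order of the set-bandwidth-limit rules.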

Filter Type: Limit Incoming Data

This filter type (bwlim-in) limits data flowing into the load balancer. It restricts the amount of data that can be transferred over a stream and is often used when there are limited resources available to process the data, or when administrators want to avoid network congestion and availability issues.

Filter Scope: Per-stream Limitation

This filter scope limits bandwidth for incoming data for each individual stream. The number of other clients accessing the network does not affect the limit, nor does connection speed or the number of connections that are open.

This filter scope is useful when the goal is to ensure a consistent level of bandwidth consumption across each individual stream. For example, per-stream limitation can be implemented to control the amount of data being sent to a server through file uploads. High volumes of simultaneous uploads can cause network congestion, and per-stream limitation may prevent this from occurring by limiting each stream to a maximum amount of bandwidth.

| filter bwlim-in <name> default-limit <size> default-period <time> [min-size <sz>] |

Filter Scope: Shared Limitation

This filter scope is a collective approach to limiting bandwidth for incoming data. With shared limitation, the total bandwidth available is shared across all streams. Each stream will receive a share of the bandwidth, ensuring server resources are used fairly and do not collectively exceed a specified amount.

This filter scope should be used when you want to limit resource usage across all clients. Most notably, if a network has limited bandwidth, this filter is effective in protecting what is available from being completely consumed.

| filter bwlim-in <name> limit <size> key <pattern> [table <table>] [min-size <sz>] |

Filter Type: Limit Outgoing Data

While the previous filter type limited incoming data, this filter type restricts the rate of data that can be sent to the client. Its purpose in preserving resources and reducing network congestion is the same.

Filter Scope: Per-stream Limitation

Similar to per-stream limitation for incoming data, this scope limits outgoing data for individual streams. Each stream will have its own limitation unaffected by streams of the same client or other clients.

For example, per-stream limitation can be used for video streaming to limit large volumes of outgoing data on a per-stream basis. Using this scope can prevent a single stream from consuming too much bandwidth and negatively impacting the experience of other users.

| filter bwlim-out <name> default-limit <size> default-period <time> [min-size <sz>] |

Filter Scope: Shared Limitation

The shared limitation scope limits outgoing data collectively across all streams. This means all data being sent from the server to any number of clients shares the same amount of available bandwidth.

This scope ensures the total bandwidth limit is never exceeded and servers always have resources available, regardless of the number of connections. When your servers are running multiple applications handling different forms of outgoing data, contention for bandwidth can be high. Shared limitation ensures that these applications, taken together, do not exceed the shared bandwidth.

| filter bwlim-out <name> limit <size> key <pattern> [table <table>] [min-size <sz>] |

Configuring & Enabling the Bandwidth Limitation Filter

There are two steps to set up a bandwidth limitation filter:

Configure the filter itself

Add TCP/HTTP rules to enable the filter on streams.

How to Configure a Bandwidth Limitation Filter

To configure a bandwidth limitation filter:

Choose the type of bandwidth limitation filter you want to use: bwlim-in for incoming data and bwlim-out for outgoing data. One filter cannot limit both incoming and outgoing traffic.

Choose a name to identify your filter. The name will be used as a reference in TCP/HTTP rules.

Choose a scope for your filter. The limitation can be applied per-stream or it can be shared by several streams. Details on these two modes are explained below.

Finally, you must set up the limitation itself (i.e., the maximum number of bytes that can be transmitted over a given period of time). It is an average value. Depending on the filter’s scope and the implementation choices, small bursts on short time periods may be observed.

You may also specify the minimum number of bytes to forward at a time. If something is transferred, it will never be less than this value, except for the last packet that may be smaller.

The minimum number of bytes must be carefully defined. If the value is too small, it can increase the CPU usage. If the value is too high, it can increase the latency. Also, if the value is too close to the bandwidth limit, some pauses may be experienced in order to not exceed the limit due to too many bytes being consumed at a time. This is highly dependent on the filter configuration.

We recommend starting with a value of around 2 TCP MSS, typically 2896 bytes, and tuning it after some experimentation.
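To make the configuration steps concrete, here is a minimal sketch of a per-stream download limit that also sets the minimum forwarding size. The filter name download-limit and the 1m limit are assumptions chosen for illustration; only the 2896-byte starting point comes from the recommendation above.

```haproxy
frontend http
    bind *:80
    mode http

    # Type: bwlim-out (outgoing data), name: download-limit, scope: per-stream
    # Limit: 1m bytes per 1s period
    # min-size: forward at least 2896 bytes (about 2 TCP MSS) at a time
    filter bwlim-out download-limit default-limit 1m default-period 1s min-size 2896

    # The filter stays inactive until a rule enables it
    http-response set-bandwidth-limit download-limit
    # ...
```

Here min-size is far below the limit, which avoids the pauses that can appear when the two values are close to each other.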

How to Enable a Bandwidth Limitation Filter

When you have configured a bandwidth limitation filter, it is inactive until you enable it via a TCP or an HTTP rule, using its limitation name:

| tcp-request content set-bandwidth-limit <name> ... [ { if | unless } <condition> ] | |

| tcp-response content set-bandwidth-limit <name> ... [ { if | unless } <condition> ] | |

| http-request set-bandwidth-limit <name> ... [ { if | unless } <condition> ] | |

| http-response set-bandwidth-limit <name> ... [ { if | unless } <condition> ] |

Examples of Configuring and Enabling a Bandwidth Limitation Filter

Here are two basic examples:

The limitation named upload-per-stream is configured to apply a default upload limit of 100KB/s to a stream. It is enabled on all requests to the admin API.

| frontend http | |

| filter bwlim-in upload-per-stream default-limit 100K default-period 1s | |

| http-request set-bandwidth-limit upload-per-stream if { path_beg /admin } | |

| # ... |

The limitation named download-per-src is configured to apply a download limit of 1MB/s to a source IP address, regardless of the number of connections it uses. It is enabled only when that source IP address exceeds 20 requests in 10s.

| frontend http | |

| filter bwlim-out download-per-src key src table down-per-src limit 1m | |

| stick-table type ip size 1m expire 30m store gpc0,http_req_rate(10s) | |

| http-request track-sc0 src | |

| http-response set-bandwidth-limit download-per-src if { sc_http_req_rate(0) gt 20 } | |

| # ... | |

| backend down-per-src | |

| # The stickiness table used by the <download-per-src> filter | |

| stick-table type ip size 1m expire 1h store bytes_out_rate(1s) |

The bandwidth limitation is only available at the stream level. Thus, it cannot be enabled with TCP connection or session rules.
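For TCP proxies, this means the rule has to be a content rule. The sketch below shows that case; the frontend name, port, filter name, and limit are hypothetical.

```haproxy
frontend tcp-in
    bind *:9000
    mode tcp

    # Per-stream upload limit of 512k bytes per second (illustrative value)
    filter bwlim-in upload-limit default-limit 512k default-period 1s

    # Stream-level rule: this is where set-bandwidth-limit can be used.
    # "tcp-request connection" and "tcp-request session" rules run before
    # a stream exists, so the limitation cannot be enabled from them.
    tcp-request content set-bandwidth-limit upload-limit
    # ...
```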

Important Things To Know About Using the Bandwidth Limitation Filter

Before going into details on the types of bandwidth limitation (per-stream limitation and shared limitation), there are some points to keep in mind:

The filter declaration order is important and defines the order in which limitations are applied, independently of the activation order.

Regardless of its type (-in or -out), a limitation can be enabled from a request or a response rule. However, if a filter limiting uploads is enabled during the response analysis, client data transferred while waiting for the response will not be counted.

Once enabled, a bandwidth limitation cannot be disabled.

For TCP streams, the limitation is applied on all transferred data. But for HTTP streams, the limitation is applied only to the payload; HTTP headers are not considered.

The bandwidth limitation is applied at the application level. It does not change how data is handled at lower levels. Data can be subject to flow control or can be encapsulated or encoded (e.g., data transferred through HTTP/2 or SSL connections). In the end, this can result in a transfer rate that does not reflect the bandwidth limitation applied at the higher level.

The bandwidth limitation can be mixed with other filters, notably HTTP compression. Depending on the order of those filters, the result will be different. You can choose to enable the compression first or not, but it must be done explicitly, by ordering your filter directives.
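As an illustration of explicit ordering, the sketch below declares the compression filter before the bandwidth limitation filter. The filter name, limit, and compression settings are assumptions; swapping the two filter lines gives the other ordering.

```haproxy
frontend http
    bind *:80
    mode http

    # Declared first: compression comes before the bandwidth limitation
    filter compression
    # Declared second: per-stream download limit (illustrative values)
    filter bwlim-out download-limit default-limit 1m default-period 1s

    # Compression settings used by the compression filter
    compression algo gzip
    compression type text/html text/plain

    http-response set-bandwidth-limit download-limit
    # ...
```

Once any other filter is declared in the section, the compression filter has to be listed explicitly like this, which is what lets you decide its position relative to the limit.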

Per-stream Bandwidth Limitation

Per-stream bandwidth limitation is the easiest mode to understand. When enabled, the limit is only applied on the current stream, independently of all the other ones. It is also pretty flexible because the limit can be dynamically customized for each stream when it is enabled.

When you configure the filter, you must define the default bandwidth limit by setting the default-limit and default-period parameters.

| filter bwlim-in <name> default-limit <size> default-period <time> [min-size <sz>] | |

| filter bwlim-out <name> default-limit <size> default-period <time> [min-size <sz>] |

When you have configured the bandwidth limitation filter, you must enable it via TCP/HTTP rules. The activation can be guarded with an ACL.

Configuring a Dynamic Per-Stream Bandwidth Limit

For a per-stream bandwidth limitation, it is possible to override the default settings by redefining the limit and/or the period. You can specify static values but you can also use sample expressions. This makes the limit fully adaptable to each stream.
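A minimal sketch of an expression-based override follows. It assumes the result of the expression is interpreted as a size in bytes; the filter name, the variable txn.bwlimit, and the /free path are hypothetical.

```haproxy
frontend http
    bind *:80
    mode http

    filter bwlim-out per-stream default-limit 1m default-period 1s

    # Enable the default limit for every stream
    http-request set-bandwidth-limit per-stream

    # Hypothetical tiering: requests under /free get 262144 bytes (256 KB) per period
    http-request set-var(txn.bwlimit) int(262144) if { path_beg /free }

    # Override the limit with a sample expression instead of a static size
    http-request set-bandwidth-limit per-stream limit var(txn.bwlimit) if { var(txn.bwlimit) -m found }
    # ...
```

The variable could just as well be filled from a map or a header, which is what makes the limit adaptable per stream.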

For a streamlined workflow, you can set up a generic per-stream bandwidth limitation with some exceptions instead of defining multiple filters. For example, for a streaming platform, you could set up one bandwidth limitation filter that accommodates several limits depending on video resolutions:

| frontend primeflix | |

| filter bwlim-out video-streaming default-limit 320k default-period 1s # 720p by default | |

| # Detect the resolution by matching on the request path | |

| http-request set-var(txn.resolution) int(360) if { path_beg /360p } | |

| http-request set-var(txn.resolution) int(480) if { path_beg /480p } | |

| http-request set-var(txn.resolution) int(720) if { path_beg /720p } | |

| http-request set-var(txn.resolution) int(1080) if { path_beg /1080p } | |

| http-request set-var(txn.resolution) int(4000) if { path_beg /4k } | |

| acl is_mp4 res.hdr(content-type) -m beg video/mp4 | |

| acl is_360p var(txn.resolution) -m int 360 | |

| acl is_480p var(txn.resolution) -m int 480 | |

| acl is_720p var(txn.resolution) -m int 720 | |

| acl is_1080p var(txn.resolution) -m int 1080 | |

| acl is_4k var(txn.resolution) -m int 4000 | |

| http-response allow if !is_mp4 # Only set a bandwidth limit for mp4 video | |

| http-response set-bandwidth-limit video-streaming # Set the default limit 720p => 320KB/s | |

| http-response set-bandwidth-limit video-streaming limit 90K if is_360p # Override default limit 360p => 90KB/s | |

| http-response set-bandwidth-limit video-streaming limit 140K if is_480p # Override default limit 480p => 140KB/s | |

| http-response set-bandwidth-limit video-streaming limit 625K if is_1080p # Override default limit 1080p => 625KB/s | |

| http-response set-bandwidth-limit video-streaming limit 2500K if is_4k # Override default limit 4K => 2.5MB/s | |

| # ... |

With this kind of limitation, you might observe a burst of (at most) one buffer at the beginning or after a long pause, followed by a stalled period as a side effect of the burst. It is especially visible if the limit is small. It is even more visible if the period is large. However, most of the time, it should be negligible because the limits are large enough.

To illustrate this behavior, here is a basic configuration of an HTTP listener with a default download limit set to 100MB/s. Depending on the request path, the limit is dynamically changed to:

10 KBytes per 10 seconds if the path begins with /limit1

10 MBytes per 10 seconds if the path begins with /limit2

1 MBytes per second if the path begins with /limit3

The default limit for all other paths.

| listen http | |

| bind *:80 | |

| mode http | |

| filter bwlim-out my-limit default-period 1s default-limit 100m | |

| http-request set-bandwidth-limit my-limit # Enable the limit for everyone | |

| http-request set-bandwidth-limit my-limit limit 10k period 10s if { path_beg /limit1 } # Override it to 10KB per 10s for requests to /limit1 | |

| http-request set-bandwidth-limit my-limit limit 10m period 10s if { path_beg /limit2 } # Override it to 10MB per 10s for requests to /limit2 | |

| http-request set-bandwidth-limit my-limit limit 1m period 1s if { path_beg /limit3 } # Override it to 1MB per 1s for requests to /limit3 | |

| server www a.b.c.d:80 |

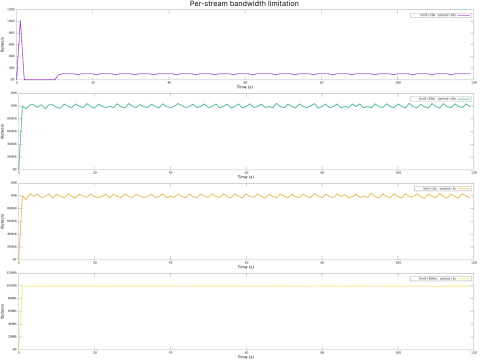

Results

Here are typical graphs of the bandwidth for clients hitting one of these limits.

On the first graph, the client quickly hits its limit over the first period because the limit is low. It then stalls for the rest of this period.

Shared Bandwidth Limitation

We might want to limit the bandwidth consumed by a group of streams sharing common criteria. This is referred to as shared bandwidth limitation.

The most common implementation of shared bandwidth limitation is limiting the bandwidth consumed by a client IP address, ensuring a client does not consume all the bandwidth, regardless of its number of established connections. In this case, you must configure the bandwidth limitation filter to use a shared limit.

This mode takes longer to configure because it relies on a stick table to enforce a limit divided equally between all streams sharing the same entry in the table.

Configure the Stick Table

The first step is to configure the stick table. Depending on the limit type, the table must be configured to store the corresponding byte-rate counter: bytes_in_rate(<period>) to limit transfers to servers, and bytes_out_rate(<period>) to limit transfers to clients.

For instance, to limit clients’ download bandwidth based on their source IP address, the following table must be defined:

| backend limit-by-src | |

| stick-table type ipv6 size 1m expire 3600s store bytes_out_rate(1s) |

It is not mandatory to define the table in a dedicated backend. You can also define it in the same proxy that declares the bandwidth limitation filter.
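A sketch of that single-proxy layout might look like the following. The filter name and the 1m limit are illustrative assumptions; because the stick table lives in the same proxy, the filter line carries no table argument.

```haproxy
listen http
    bind *:80
    mode http

    # Table declared in the proxy that also declares the filter
    stick-table type ipv6 size 1m expire 3600s store bytes_out_rate(1s)

    # No "table" argument: the filter uses the stick table of the current proxy
    filter bwlim-out limit-per-src key src limit 1m

    http-response set-bandwidth-limit limit-per-src
    # ...
```

A dedicated backend is still the more convenient choice when the proxy already needs its own stick table for something else, since a proxy can only declare one.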

Configure the Bandwidth Limitation Filter to use the Stick Table

Then, configure the bandwidth limitation filter to use the stick table:

| filter bwlim-in <name> limit <size> key <pattern> [table <table>] [min-size <sz>] | |

| filter bwlim-out <name> limit <size> key <pattern> [table <table>] [min-size <sz>] |

The type must match the counter type of the stick table.

Specify the stick table, or leave it unspecified to use the default, which is the stick table declared in the current proxy.

Define the limit. Unlike per-stream bandwidth limitation, there is no default limit. It cannot be dynamically redefined, and it must be set using the limit parameter.

Define the key expression to use to select an entry in the stick table.

Based on the previous example, we can limit the bandwidth per source IP address with the following configuration:

| listen http | |

| bind *:80 | |

| mode http | |

| filter bwlim-out limit-per-src key src,ipmask(32,64) table limit-by-src limit 1m min-size 2896 | |

| http-response set-bandwidth-limit limit-per-src | |

| server www a.b.c.d:80 | |

| backend limit-by-src | |

| stick-table type ipv6 size 1m expire 3600s store bytes_out_rate(1s) |

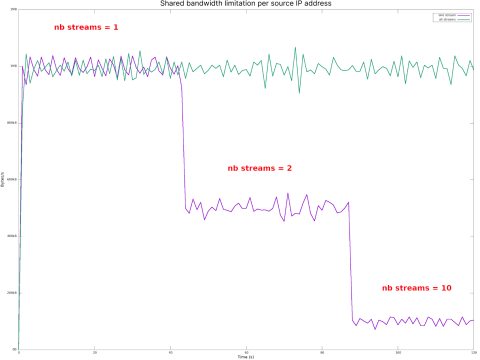

Results

All streams with the same IPv4 address or within the same /64 IPv6 address block will share the same limit. It will be divided equally between all these streams.

On this graph, the bandwidth for a given client is limited to 1MB/s, whether it has one, two, or ten streams. The bandwidth for a stream is around 1MB/s if there is only one stream, around 500KB/s with two streams, and around 100KB/s with ten streams.

With this kind of limitation, it is also possible to enforce a global limit, for instance, for a frontend, a backend, or a server. With the following configuration, the upload bandwidth is globally limited to 100MB/s for the frontend named tcp, divided equally among all clients connected to port 1234:

| frontend tcp | |

| bind *:1234 | |

| mode tcp | |

| filter bwlim-in global-up-limit key fe_name table limit-by-front limit 100m min-size 2896 | |

| tcp-request content set-bandwidth-limit global-up-limit | |

| # ... | |

| backend limit-by-front | |

| stick-table type string len 64 size 10 expire 3600s store bytes_in_rate(1s) |

Conclusion

The bandwidth limitation filter is a simple and powerful tool for shaping traffic. HAProxy users and HAProxy Enterprise customers can implement per-stream or shared limitations or both at the same time.

We expect the bandwidth limitation filter to evolve further, providing our users with more tools to manage the flow of traffic into and out of their infrastructure. Stay tuned for more information.
