Detect and stop fake web crawlers using HAProxy Enterprise’s Verify Crawler add-on.

How your website ranks on Google can have a substantial impact on the number of visitors you receive, which can ultimately make or break the success of your online business. To keep search results fresh, Google and other search engines deploy programs called web crawlers that scan and index the Internet at regular intervals, registering new and updated content. Knowing this, it’s important that you welcome web crawlers and never impede them from carrying out their crucial mission.

Unfortunately, malicious actors deploy their own programs that masquerade as search engine crawlers. These unwanted bots scrape and steal your content, post spam on forums, and feed intel back to competitors, all while claiming to be innocent Googlebot. As it turns out, impersonating a crawler is not difficult. Every web request comes with a piece of metadata called a User-Agent header that describes the browser or other program that made the request.

For example, when I visit a website, the following User-Agent value is sent along too:

User-Agent: Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:80.0) Gecko/20100101 Firefox/80.0

From this, you can gather that I am using Firefox, that its version is 80.0, and that I am using the Linux operating system. When Googlebot makes a request, its User-Agent value looks like this:

User-Agent: Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)

In many cases, all that a malicious user needs to do is have their bot send Googlebot’s User-Agent string, since many website operators give special clearance to programs presenting that identity. Some of these fake bots have even been reported to act like Googlebot, crawling your site in a similar fashion to further avoid detection. What can you do to stop them?
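As a small illustration of how little effort this impersonation takes, the sketch below builds (but does not send) an HTTP request that carries Googlebot’s User-Agent string. The target URL is a placeholder, not something from this article.

```python
from urllib.request import Request

# Googlebot's User-Agent value, as quoted in this article.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Build a request that claims to be Googlebot. Nothing is sent here;
# https://example.com/ is a placeholder target.
req = Request("https://example.com/", headers={"User-Agent": GOOGLEBOT_UA})

# urllib stores header names in capitalized form, hence "User-agent".
print(req.get_header("User-agent"))
```

Nothing in the request proves who built it, which is why a User-Agent match on its own cannot be treated as proof of identity.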

HAProxy Enterprise Bot Management

The HAProxy Enterprise load balancer has yet another weapon in the fight against bad bots. Its Verify Crawler add-on will check the authenticity of any client that claims to be a web crawler and let you enforce any of the available response policies against those it categorizes as phony. Verify Crawler lets you stop fake web crawlers without bothering legitimate search engine bots.

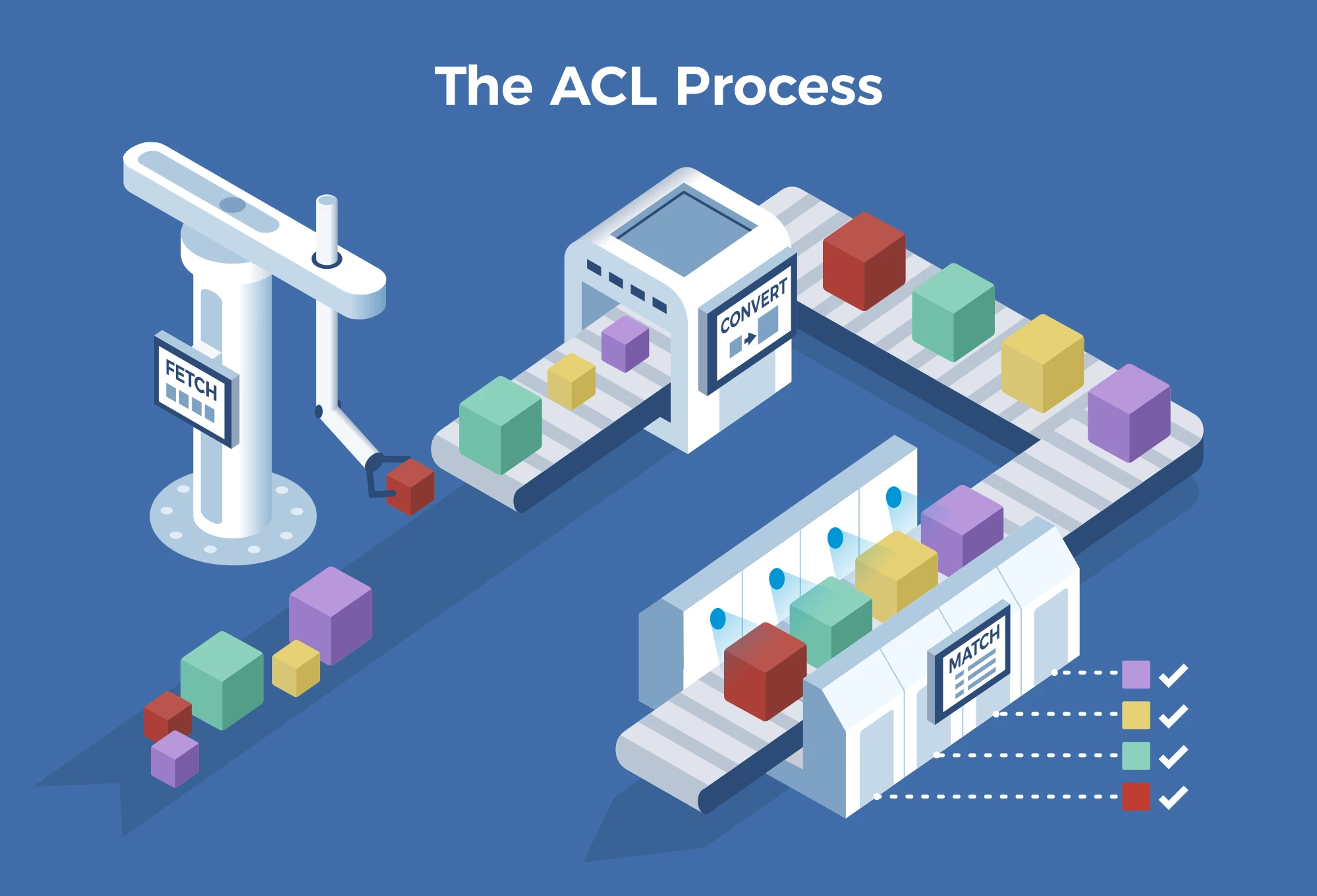

Verify Crawler does not block the flow of traffic while it checks a supposed search engine crawler. Instead, it performs its verification process in the background and then puts the result (valid/invalid) into an in-memory record that HAProxy Enterprise will consult the next time it sees the same client make a request. The steps follow the procedure recommended by Google.
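The non-blocking pattern described here can be sketched roughly as follows. This is not how the add-on is implemented. The names `verdicts`, `pending`, `on_request`, and `background_pass` are all hypothetical, and the sketch assumes the caller has already decided that the client claims to be a crawler.

```python
from collections import deque

# Hypothetical in-memory state; the names are illustrative only.
verdicts = {}      # client IP -> True (valid) or False (invalid)
pending = deque()  # client IPs waiting for background verification

def on_request(client_ip):
    """Answer immediately from the in-memory record; never wait on DNS."""
    if client_ip in verdicts:
        return "valid" if verdicts[client_ip] else "invalid"
    if client_ip not in pending:
        pending.append(client_ip)  # leave the check to the background pass
    return "unverified"

def background_pass(verify):
    """Drain the queue and store each verdict for later requests.

    `verify` is any callable that takes an IP and returns True or False.
    """
    while pending:
        ip = pending.popleft()
        verdicts[ip] = verify(ip)

# Usage: the first request is let through unverified; once a background
# pass has run, the stored verdict applies to the next request.
first = on_request("192.0.2.10")
background_pass(lambda ip: False)  # stand-in verifier that rejects everyone
second = on_request("192.0.2.10")
print(first, second)
```

The point of the design is that the request path only reads a dictionary, so a slow DNS lookup never delays traffic.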

How it works:

When HAProxy Enterprise receives a request from a client, it checks whether the given User-Agent value matches any known search engine crawler (e.g., Bingbot or Googlebot). If so, it tags that client as needing verification.

Verify Crawler runs in the background and polls for the latest list of unverified crawlers.

For each record, it does a reverse DNS lookup of the client’s source IP address to see if it resolves to the search engine’s domain, such as googlebot.com. If it does, it has passed the first test.

The verifier performs another test: It does a forward DNS lookup of the hostname returned in the previous step (e.g. crawl-66-249-66-1.googlebot.com) to get the list of IP addresses for that name. The client’s IP address must be in this list; otherwise, it fails the second test.

The verifier finishes by storing the client’s IP address and status—valid or not valid—so that HAProxy will remember if this client is a legitimate web crawler the next time it receives a request from it.
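The two DNS tests above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the add-on’s actual code: the `CRAWLER_DOMAINS` table and all function names are illustrative, and only IPv4 is handled. The forward lookup resolves the hostname returned by the reverse lookup, which is how Google’s published verification guidance describes the check.

```python
import socket

# Assumed mapping from a User-Agent token to the domain suffixes its
# crawler should reverse-resolve to. Illustrative, not the add-on's list.
CRAWLER_DOMAINS = {
    "googlebot": (".googlebot.com", ".google.com"),
    "bingbot": (".search.msn.com",),
}

def claimed_crawler(user_agent):
    """Return the crawler token the User-Agent claims, or None."""
    ua = user_agent.lower()
    for token in CRAWLER_DOMAINS:
        if token in ua:
            return token
    return None

def reverse_lookup(ip):
    # PTR lookup: IP address -> primary hostname.
    return socket.gethostbyaddr(ip)[0]

def forward_lookup(hostname):
    # A-record lookup: hostname -> list of IPv4 addresses.
    return socket.gethostbyname_ex(hostname)[2]

def verify_crawler(ip, user_agent, reverse=reverse_lookup, forward=forward_lookup):
    """Return True (valid), False (invalid), or None (not claiming to be a crawler).

    The resolvers are parameters so they can be replaced in tests.
    """
    token = claimed_crawler(user_agent)
    if token is None:
        return None
    try:
        hostname = reverse(ip).rstrip(".").lower()
        # Test 1: the reverse lookup must land in the crawler's domain.
        if not hostname.endswith(CRAWLER_DOMAINS[token]):
            return False
        # Test 2: the forward lookup must include the client's IP.
        return ip in forward(hostname)
    except OSError:
        # A failed lookup (socket.herror, socket.gaierror) counts as invalid.
        return False
```

Both tests are needed because whoever controls an IP range also controls its reverse DNS records and can make an address claim any hostname. Only the forward lookup, answered by the search engine’s own DNS, confirms the claim.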

Of course, these steps could be performed manually, but allowing HAProxy Enterprise to do it automatically for each unverified bot will save you huge amounts of time and labor, especially since Google sometimes changes the IP addresses it uses. It also fits in with the other bot management mechanisms offered with HAProxy Enterprise to give you multiple layers of defense against various types of malicious behavior. HAProxy Enterprise is equipped with several other measures, including:

Real-Time Cluster-Wide Tracking deploys behavior analysis across your cluster. Combined with Access Control Lists (ACLs), which are pattern-matching rules, it lets you track requests from the same client and discover patterns of anomalous behavior, such as web scraping or brute-force login attempts. Learn more about this in our blog post Bot Protection with HAProxy.

The JavaScript Challenge module forces bots to solve dynamically changing math problems, which many scripted bots are simply unable to do. You can use this when your site is under active attack to filter out the unwanted traffic before it reaches your backend servers.

The reCAPTCHA module presents a Google reCAPTCHA challenge to suspected bots, which stops them but not human users. A reCAPTCHA displays an image and asks the user to identify certain objects they see.

The Fingerprint module uses multiple data points to accurately identify a returning client, regardless of whether they change aspects of their requests, such as rotating User-Agent values.

Together, these countermeasures keep your website and applications safe from a range of bot threats without compromising the quality of the experience regular users get.

Protect Your Website & Applications from Bad Bots

Verify Crawler comes included with HAProxy Enterprise and is yet another way to protect your website and applications from bad bots. Until now, impersonating Googlebot or other search engine crawlers has been an easy way for attackers to evade detection, since few website operators have the time or resources to combat it. With HAProxy Enterprise, you can have that validation performed on your behalf.

HAProxy Enterprise is the world’s fastest and most widely used software load balancer. It powers modern application delivery at any scale and in any environment, providing the utmost performance, observability, and security. Organizations harness its cutting-edge features and enterprise suite of add-ons, backed by authoritative expert support and professional services. Ready to learn more? Contact us and sign up for a free trial.

Want to stay up to date on similar topics? Subscribe to this blog! You can also follow us on Twitter and join the conversation on Slack.

Note: Coincidentally, while we were working on this blog post, a thread on this subject was started on the mailing list. You can follow the discussion here.