Run your HAProxy Kubernetes Ingress Controller in External mode to reduce network hops and latency.

Join our webinar to learn more about running the ingress controller in external mode.

Traditionally, you would run the HAProxy Kubernetes Ingress Controller as a pod inside your Kubernetes cluster. As a pod, it has access to other pods because they share the same pod-level network. That allows it to route and load balance traffic to applications running inside pods, but the challenge is how to connect traffic from outside the cluster to the ingress controller in the first place.

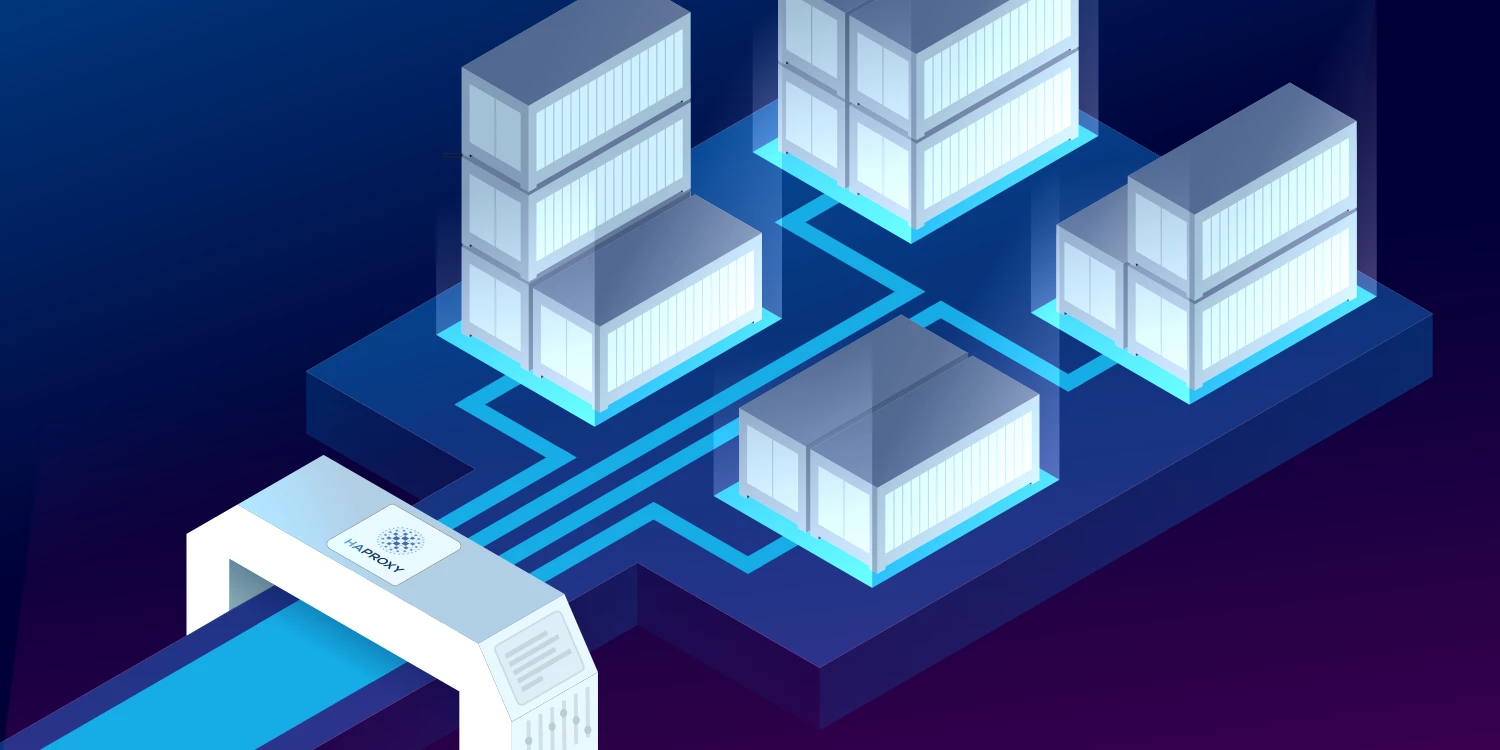

Like other pods, the ingress controller lives inside the walled environment of Kubernetes, so clients outside can’t reach it unless you expose it as a Service. A Kubernetes Service creates a bridge to the outside world when you set its type to either NodePort or LoadBalancer. In cloud environments, the LoadBalancer option is more common, since it instructs the cloud provider to spin up one of its cloud load balancers (e.g. AWS Network Load Balancer) and place it in front of the ingress controller. Administrators of on-premises Kubernetes installations typically use NodePort and then manually put a load balancer in front. So, in nearly every case, you end up with a load balancer in front of your ingress controller, which means that there are two layers of proxies through which traffic must travel to reach your applications.

Looking for a deeper discussion of this topic? Watch our on-demand webinar, Kubernetes Ingress: Routing and Load Balancing HTTP(s) Traffic.

The traditional layout, wherein an external load balancer sends traffic to one of the worker nodes and then Kubernetes relays it to the node that’s running the ingress controller pod, looks like this:

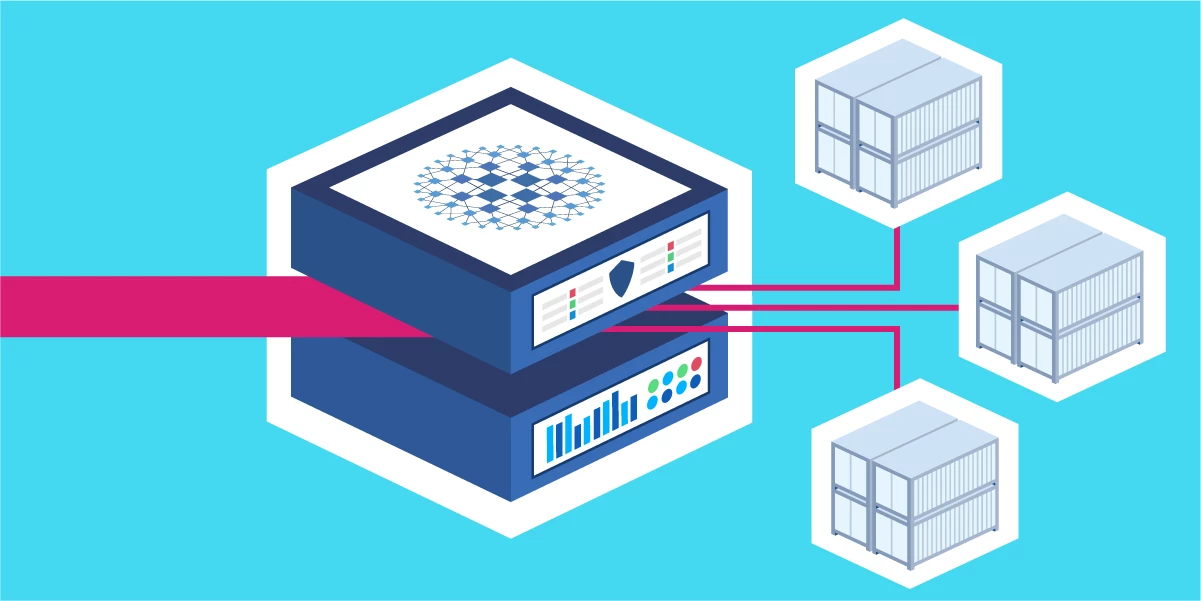

Beginning with version 1.5 of the HAProxy Kubernetes Ingress Controller, you have the option of running it outside of your Kubernetes cluster, which removes the need for an additional load balancer in front. It gets direct access to your physical network and becomes routable to external clients. The caveat is that now that the ingress controller is not running as a pod and is not inside the Kubernetes cluster, it needs access to the pod-level network in some other way.

In this blog post, we solve that problem by installing Project Calico as the network plugin in Kubernetes and then enabling Calico to share its network routes with the ingress controller server via BGP peering. In a production environment, this BGP peering would be to a Layer 3 device in your network, but for this demonstration, we use the BIRD internet routing daemon as a router, installed onto the same VM as the ingress controller.

The diagram below describes this layout, wherein the ingress controller sits outside of the Kubernetes cluster and uses BIRD to peer with the pod-level network:

To follow along with the examples, download the demo project from GitHub. It uses VirtualBox and Vagrant to create a test lab that has four virtual machines. One VM runs the ingress controller and BIRD while the other three form the Kubernetes cluster. Once downloaded, call vagrant up from within the project’s directory to create the VMs.

We’ll step through how we run the HAProxy Kubernetes Ingress Controller externally and how to set up the Kubernetes cluster with Calico for networking.

The Kubernetes Cluster

The demo project uses Vagrant and VirtualBox to create four virtual machines on your local workstation. Three of them constitute the Kubernetes cluster:

one control plane node, which is the brain of the cluster;

two worker nodes where pods are run.

Vagrant automates much of the setup by calling a Bash script that runs the required commands to install Kubernetes and Calico. For those of you wondering why we need to install Calico, you must remember that Kubernetes is a modular framework. Several of its components can be swapped out with different tooling as long as those components implement the required interface. Network plugins must implement the Container Network Interface (CNI). There are several popular options, including Project Calico, Flannel, Cilium, and Weave Net, among others. Calico’s BGP peering feature makes it an attractive choice for our specific needs.

Check the project’s setup_kubernetes_control_plane.sh Bash script to see the details of how the control plane node is provisioned, but at a high level, it performs these steps:

Installs Docker.

Installs the

kubeadmandkubectlcommand-line tools.Calls

kubeadm initto bootstrap the Kubernetes cluster and turn this VM into the cluster’s control plane.Copies a kubeconfig file to the Vagrant user’s home directory so that when you SSH into the VM as that user, you can run

kubectlcommands.Installs Calico as the network plugin, with BGP enabled.

Installs

calicoctl, the Calico CLI tool used to finalize the setup.Creates a ConfigMap object in Kubernetes named haproxy-kubernetes-ingress, which the ingress controller requires to be present in the cluster.

The two worker nodes are initialized with a Bash script named setup_kubernetes_worker.sh, which performs the following steps:

Installs Docker.

Installs

kubeadmandkubectl.Calls

kubeadm jointo turn the VM into a worker node and join it to the cluster.

The part that installs Calico on the control plane node is particularly interesting. The script installs the Calico operator first, then creates a Kubernetes object named Installation that enables BGP and assigns the IP address range for the pod network:

| apiVersion: operator.tigera.io/v1 | |

| kind: Installation | |

| metadata: | |

| name: default | |

| spec: | |

| # Configures Calico networking. | |

| calicoNetwork: | |

| bgp: Enabled | |

| # Note: The ipPools section cannot be modified post-install. | |

| ipPools: | |

| - blockSize: 26 | |

| cidr: 172.16.0.0/16 | |

| encapsulation: IPIP | |

| natOutgoing: Enabled | |

| nodeSelector: all() |

Next, it installs the calicoctl command-line tool and uses it to create a BGPConfiguration and a BGPPeer object in Kubernetes.

| apiVersion: projectcalico.org/v3 | |

| kind: BGPConfiguration | |

| metadata: | |

| name: default | |

| spec: | |

| logSeverityScreen: Info | |

| nodeToNodeMeshEnabled: true | |

| asNumber: 65000 | |

| --- | |

| apiVersion: projectcalico.org/v3 | |

| kind: BGPPeer | |

| metadata: | |

| name: my-global-peer | |

| spec: | |

| peerIP: 192.168.50.21 | |

| asNumber: 65000 |

The BGPConfiguration object sets the level of logging for BGP connections, enables the full mesh network mode, and assigns an Autonomous System (AS) number to Calico. The BGPPeer object is given the IP address of the ingress controller virtual machine where BIRD will be running and sets the AS number for the BIRD router. I’ve chosen the AS number 65000. This is how Calico will share its pod-level network routes with BIRD so that the ingress controller can use them.

The Ingress Controller and BIRD

In the demo project, we install the HAProxy Kubernetes Ingress Controller and BIRD onto the same virtual machine. This VM sits outside of the Kubernetes cluster where BIRD receives the IP routes from Calico and the ingress controller uses them to relay client traffic to pods.

Vagrant calls a Bash script named setup_ingress_controller.sh to perform the following steps:

Installs HAProxy, but disables it as a service.

Calls the

setcapcommand to allow HAProxy to listen on the privileged ports 80 and 443.Downloads the HAProxy Kubernetes Ingress Controller binary and copies it to /usr/local/bin.

Configures a Systemd service for running the ingress controller.

Copies a kubeconfig file to the root user’s home directory, which the ingress controller requires to access the Kubernetes cluster for detecting Ingress objects and changes to services.

Installs BIRD.

The ingress controller should be running already because it is configured as a Systemd service. Its service file executes the following command:

| /usr/local/bin/haproxy-ingress-controller \ | |

| --external \ | |

| --configmap=default/haproxy-kubernetes-ingress \ | |

| --program=/usr/sbin/haproxy \ | |

| --disable-ipv6 \ | |

| --ipv4-bind-address=0.0.0.0 \ | |

| --http-bind-port=80 |

The --external argument is what allows the ingress controller to run outside of Kubernetes. Already, it can communicate with the Kubernetes cluster and populate the HAProxy configuration with server details because it has the kubeconfig file at /root/.kube/config. However, requests will fail until we make the 172.16.0.0/16 network routable.

Calico is equipped to peer with BIRD so that it can share information about the pod network. BIRD then populates the route table on the server where it’s running, which makes the pod IP addresses routable for the ingress controller. Before showing you how to configure BIRD to receive routes from Calico, it will help to see how routes have been assigned on the Kubernetes side.

Calico & BGP Peering

To give you an idea about how this works, let’s take a tour of the demo environment. The virtual machines have been assigned the following IP addresses within the 192.168.50.0/24 network:

ingress controller node = 192.168.50.21

control-plane node = 192.168.50.22

worker 1 = 192.168.50.23

worker 2 = 192.168.50.24

First, SSH into the Kubernetes control plane node using the vagrant ssh command:

| $ vagrant ssh controlplane |

Call kubectl get nodes to see that all nodes are up and ready:

| $ kubectl get nodes | |

| NAME STATUS ROLES AGE VERSION | |

| controlplane Ready control-plane,master 4h7m v1.21.1 | |

| worker1 Ready <none> 4h2m v1.21.1 | |

| worker2 Ready <none> 3h58m v1.21.1 |

Next, call calicoctl node status to check which VMs are sharing routes via BGP:

| $ sudo calicoctl node status | |

| Calico process is running. | |

| IPv4 BGP status | |

| +---------------+-------------------+-------+----------+--------------------------------+ | |

| | PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO | | |

| +---------------+-------------------+-------+----------+--------------------------------+ | |

| | 192.168.50.21 | global | start | 00:10:03 | Active Socket: Connection | | |

| | | | | | refused | | |

| | 192.168.50.23 | node-to-node mesh | up | 00:16:11 | Established | | |

| | 192.168.50.24 | node-to-node mesh | up | 00:20:08 | Established | | |

| +---------------+-------------------+-------+----------+--------------------------------+ |

The last two lines, which have the IP addresses 192.168.50.23 and 192.168.50.24, indicate that the worker nodes that have established a BGP connection are sharing routes with one another. However, the first line, 192.168.50.21, is the ingress controller VM and its connection has been refused because we haven’t configured BIRD yet.

Calico has assigned each of the worker nodes within the Kubernetes cluster a subset of the larger 172.16.0.0/16 network, which is an overlay network on top of the 192.168.50.0/24 network. You can call kubectl describe blockaffinities to see the network ranges that it assigned.

| $ kubectl describe blockaffinities | grep -E "Name:|Cidr:" | |

| Name: controlplane-172-16-49-64-26 | |

| Cidr: 172.16.49.64/26 | |

| Name: worker1-172-16-171-64-26 | |

| Cidr: 172.16.171.64/26 | |

| Name: worker2-172-16-189-64-26 | |

| Cidr: 172.16.189.64/26 |

Here, the first worker was given the range 172.16.171.64/26 and the second was given 172.16.189.64/26. We need to share these routes with BIRD so that:

If a client requests an IP in the range 172.16.171.64/26, they go to worker1.

If a client requests an IP in the range 172.16.189.64/26, they go to worker2.

The Kubernetes control plane will schedule pods onto one of these nodes.

To configure BIRD, SSH into the ingress controller VM:

| $ vagrant ssh ingress |

Edit the file /etc/bird/bird.conf and add a protocol bgp section for each worker node. Notice that the import filter section tells BIRD that it should import only the IP range that’s assigned to that node and filter out the rest.

| router id 192.168.50.21; | |

| log syslog all; | |

| # controlplane | |

| protocol bgp { | |

| local 192.168.50.21 as 65000; | |

| neighbor 192.168.50.22 as 65000; | |

| direct; | |

| import filter { | |

| if ( net ~ [ 172.16.0.0/16{26,26} ] ) then accept; | |

| }; | |

| export none; | |

| } | |

| # worker1 | |

| protocol bgp { | |

| local 192.168.50.21 as 65000; | |

| neighbor 192.168.50.23 as 65000; | |

| direct; | |

| import filter { | |

| if ( net ~ [ 172.16.0.0/16{26,26} ] ) then accept; | |

| }; | |

| export none; | |

| } | |

| # worker2 | |

| protocol bgp { | |

| local 192.168.50.21 as 65000; | |

| neighbor 192.168.50.24 as 65000; | |

| direct; | |

| import filter { | |

| if ( net ~ [ 172.16.0.0/16{26,26} ] ) then accept; | |

| }; | |

| export none; | |

| } | |

| protocol kernel { | |

| scan time 60; | |

| #import none; | |

| export all; # insert routes into the kernel routing table | |

| } | |

| protocol device { | |

| scan time 60; | |

| } |

Restart the BIRD service to apply the changes:

| $ sudo systemctl restart bird |

Let’s verify that everything is working. Call birdc show protocols to see that, from BIRD’s perspective, BGP peering has been established:

| $ sudo birdc show protocols | |

| BIRD 1.6.8 ready. | |

| name proto table state since info | |

| bgp1 BGP master up 23:18:17 Established | |

| bgp2 BGP master up 23:18:17 Established | |

| bgp3 BGP master up 23:18:59 Established | |

| kernel1 Kernel master up 23:18:15 | |

| device1 Device master up 23:18:15 |

You can also call birdc show route protocol to check that the expected pod-level networks match up to the VM IP addresses:

| $ sudo birdc show route protocol bgp2 | |

| BIRD 1.6.8 ready. | |

| 172.16.171.64/26 via 192.168.50.23 on enp0s8 [bgp2 23:18:18] * (100) [i] | |

| $ sudo birdc show route protocol bgp3 | |

| BIRD 1.6.8 ready. | |

| 172.16.189.64/26 via 192.168.50.24 on enp0s8 [bgp3 23:19:00] * (100) [i] |

You can also check the server’s route table to see that the new routes are there:

| $ route | |

| Kernel IP routing table | |

| Destination Gateway Genmask Flags Metric Ref Use Iface | |

| default _gateway 0.0.0.0 UG 100 0 0 enp0s3 | |

| 10.0.2.0 0.0.0.0 255.255.255.0 U 0 0 0 enp0s3 | |

| _gateway 0.0.0.0 255.255.255.255 UH 100 0 0 enp0s3 | |

| 172.16.49.64 192.168.50.22 255.255.255.192 UG 0 0 0 enp0s8 | |

| 172.16.171.64 192.168.50.23 255.255.255.192 UG 0 0 0 enp0s8 | |

| 172.16.189.64 192.168.50.24 255.255.255.192 UG 0 0 0 enp0s8 | |

| 192.168.50.0 0.0.0.0 255.255.255.0 U 0 0 0 enp0s8 |

If you go back to the control plane VM and run calicoctl node status, you will see that Calico also detects that BGP peering has been established with the ingress controller VM, 192.168.50.21.

| $ sudo calicoctl node status | |

| Calico process is running. | |

| IPv4 BGP status | |

| +---------------+-------------------+-------+----------+-------------+ | |

| | PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO | | |

| +---------------+-------------------+-------+----------+-------------+ | |

| | 192.168.50.21 | global | up | 00:32:13 | Established | | |

| | 192.168.50.23 | node-to-node mesh | up | 00:16:12 | Established | | |

| | 192.168.50.24 | node-to-node mesh | up | 00:20:09 | Established | | |

| +---------------+-------------------+-------+----------+-------------+ |

Add an Ingress

With BGP sharing routes between the Kubernetes cluster and the ingress controller server, we’re ready to put our setup into action. Let’s add an Ingress object to make sure that it works. The following YAML deploys five instances of an application and creates an Ingress object:

| apiVersion: apps/v1 | |

| kind: Deployment | |

| metadata: | |

| labels: | |

| run: app | |

| name: app | |

| spec: | |

| replicas: 5 | |

| selector: | |

| matchLabels: | |

| run: app | |

| template: | |

| metadata: | |

| labels: | |

| run: app | |

| spec: | |

| containers: | |

| - name: app | |

| image: jmalloc/echo-server | |

| ports: | |

| - containerPort: 8080 | |

| --- | |

| apiVersion: v1 | |

| kind: Service | |

| metadata: | |

| labels: | |

| run: app | |

| name: app | |

| spec: | |

| selector: | |

| run: app | |

| ports: | |

| - name: port-1 | |

| port: 80 | |

| protocol: TCP | |

| targetPort: 8080 | |

| --- | |

| apiVersion: networking.k8s.io/v1 | |

| kind: Ingress | |

| metadata: | |

| name: test-ingress | |

| namespace: default | |

| spec: | |

| rules: | |

| - host: test.local | |

| http: | |

| paths: | |

| - path: / | |

| pathType: Prefix | |

| backend: | |

| service: | |

| name: app | |

| port: | |

| number: 80 |

The Ingress object configures the ingress controller to route any request for test.local to the application you just deployed. You will need to update the /etc/hosts file on your host machine to map test.local to the ingress controller VM’s IP address, 192.168.50.21.

Deploy the objects with kubectl:

| $ kubectl apply -f app.yaml |

Open test.local in your browser and you will be greeted by the application, which simply prints the details of your HTTP request. Congratulations! You are running the HAProxy Kubernetes Ingress Controller outside of your Kubernetes cluster! You no longer need another proxy in front of it.

To see the harpoxy.cfg file that got generated, open the file /tmp/haproxy-ingress/etc/haproxy.cfg. It generated a backend named default-app-port-1 that contains a server line for each pod running the application. Of course, the IP addresses set on each server line are now routable. You can scale your application up or down and the ingress controller will automatically adjust its configuration to match.

| backend default-app-port-1 | |

| mode http | |

| balance roundrobin | |

| option forwardfor | |

| server SRV_1 172.16.171.67:8080 check weight 128 | |

| server SRV_2 172.16.171.68:8080 check weight 128 | |

| server SRV_3 172.16.189.68:8080 check weight 128 | |

| server SRV_4 172.16.189.69:8080 check weight 128 | |

| server SRV_5 172.16.189.70:8080 check weight 128 |

Conclusion

In this blog post, you learned how to run the HAProxy Kubernetes Ingress Controller externally to your Kubernetes cluster, which obviates the need for running another load balancer in front. This technique is worth a look if you require low latency since it involves fewer network hops, or if you want to run HAProxy outside of Kubernetes for other reasons. You must handle making this setup highly available, though, such as by using Keepalived.

HAProxy Enterprise powers modern application delivery at any scale and in any environment, providing the utmost performance, observability, and security for your critical services. Organizations harness its cutting-edge features and enterprise suite of add-ons, which are backed by authoritative, expert support and professional services. Ready to learn more? Sign up for a free trial.

Want to know when more content like this is published? Subscribe to our blog or follow us on Twitter. You can also join the conversation on Slack.

Subscribe to our blog. Get the latest release updates, tutorials, and deep-dives from HAProxy experts.